We started this project with a simple assumption: solving a maze is easy. If you enter a maze, place your hand on one wall, and walk without ever lifting your hand, you will eventually reach the exit, provided the maze is simply connected (all of its walls are joined together). But what happens if the maze is… dynamic? How would you solve a maze that shifts and changes? In general the problem is intractable; there is no guaranteed way to solve such a maze. Still, we believe that brute force is a poor approach, and because cycles can arise in such a maze, it is sometimes not a solution at all. We believe that in a dynamic maze it is possible to devise a strategy that, given an unlimited amount of time, can solve the maze by using knowledge about the structure of the maze as a whole.

This problem is essentially a simplification of a much more complicated problem in robotics: given a starting point and a known destination at an unknown location in an unknown environment, navigate from point A to point B, with the added complication that the environment itself keeps changing. Think of a delivery robot dropped off at an active construction site. There is no time to halt construction so the robot can scan the site and build a map, and vehicles and materials will be constantly moving around. A worker tells the robot to go to the manager’s office and gives it a description to identify the office. The robot must get from where it is to the office despite these conditions. This is essentially the problem we are solving.

Problem: Develop an algorithm to autonomously navigate and solve a dynamically changing maze.

Explore the maze and build a representation of it as a graph data structure, with intersections as nodes and the distances between them as edge weights.

Create a navigation system based on this graph representation to travel effectively around the maze, updating the graph in real time as changes are detected. In effect, this means developing our own SLAM-style algorithm (SLAM, Simultaneous Localization and Mapping, builds a map of an unknown environment while tracking the robot’s position within it).
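Below is a minimal sketch of such a graph. It assumes string labels for intersections and corridor lengths in meters. The class and method names are hypothetical and are not taken from the project's Graph class. Each edge is stored in both directions because a corridor can be driven either way, and the removal methods cover the case where the maze changes:

```python
class MazeGraph:
    """Undirected weighted graph: intersections are nodes, corridor lengths are edge weights."""

    def __init__(self) -> None:
        # node label -> {neighbor label: corridor length in meters}
        self.adj: dict = {}

    def add_node(self, node: str) -> None:
        self.adj.setdefault(node, {})

    def add_edge(self, a: str, b: str, distance: float) -> None:
        # A corridor can be driven in either direction, so store it both ways.
        self.adj.setdefault(a, {})[b] = distance
        self.adj.setdefault(b, {})[a] = distance

    def remove_edge(self, a: str, b: str) -> None:
        # A corridor between a and b has been found blocked.
        self.adj.get(a, {}).pop(b, None)
        self.adj.get(b, {}).pop(a, None)

    def remove_node(self, node: str) -> None:
        # An intersection no longer exists: drop it and every corridor touching it.
        for neighbor in self.adj.pop(node, {}):
            self.adj.get(neighbor, {}).pop(node, None)

    def neighbors(self, node: str) -> dict:
        return dict(self.adj.get(node, {}))
```

For example, after `add_edge("A", "B", 1.5)` and `add_edge("B", "C", 2.0)`, detecting that the B–C corridor has closed is a single `remove_edge("B", "C")`.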

A Helpful Resource for Overall Maze Methodology: https://www.pololu.com/file/0J195/line-maze-algorithm.pdf

Some Help With Tuning Your PID: https://www.crossco.com/resources/technical/how-to-tune-pid-loops/

Lidar Methodology: https://github.com/ssscassio/ros-wall-follower-2-wheeled-robot/blob/master/report/Wall-following-algorithm-for-reactive%20autonomous-mobile-robot-with-laser-scanner-sensor.pdf

Use: All that is needed to use the code is to clone the GitHub repository and put your robot in a maze!

From a bird’s-eye view, our solution to the dynamic maze mirrors an interesting problem in graph algorithms. Assuming that the key lies in understanding the structure of the maze as a whole, we build a map on the fly as we traverse it. We represent the maze as a graph. Each intersection we reach becomes a node, and each corridor between two intersections becomes an edge weighted by its length. We create nodes and edges as we encounter them during exploration and delete them when we detect changes in the maze. Seen this way, the task is a graph algorithms problem: a search for a target node, starting from an unknown entry point, in an unknown graph that is itself unreliable, or rather “live” and subject to modification while it is being read. Solving it then has two stages. The first is learning the graph as it is while we explore, that is, building a full picture of it. If we have explored everything without finding the exit, the search for the exit becomes a search for change. The crux of the problem is characterizing the changes themselves, because how changes occur determines how the graph search should look for them. For example, if every node were equally likely to change at random, an efficient strategy would be to find the shortest non-cyclic path that covers as many nodes as possible. The change behavior we used for our problem is that changes happen repeatedly at certain nodes.
To solve the maze, we therefore proceed in two steps. If we fully explore the maze without finding the exit, we first use the coverage strategy above and visit as many nodes as quickly as possible, looking for changes. Once we find a node that changes, we label it so we can check it again later, and we repeat these checks until new routes through the maze open up.
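Here is one way to implement the re-checking step. It is a sketch under our own assumptions, not the project code. Dijkstra's algorithm over the weighted graph finds the nearest node flagged as changing, and the search then repeats greedily from there. The function names and the dict-of-dicts adjacency format are hypothetical. The greedy nearest-neighbor order is a heuristic, not a guaranteed shortest tour:

```python
import heapq


def nearest_flagged_node(adj, start, flagged):
    """Dijkstra from `start`; return (node, distance) for the closest node in `flagged`.

    `adj` maps node -> {neighbor: corridor length}. Returns None if no flagged
    node is reachable.
    """
    dist = {start: 0.0}
    heap = [(0.0, start)]
    visited = set()
    while heap:
        d, node = heapq.heappop(heap)
        if node in visited:
            continue
        visited.add(node)
        if node in flagged:
            return node, d
        for neighbor, length in adj.get(node, {}).items():
            nd = d + length
            if nd < dist.get(neighbor, float("inf")):
                dist[neighbor] = nd
                heapq.heappush(heap, (nd, neighbor))
    return None


def recheck_order(adj, start, flagged):
    """Greedy tour: repeatedly travel to the nearest flagged node not yet checked."""
    remaining = set(flagged)
    here, order = start, []
    while remaining:
        hit = nearest_flagged_node(adj, here, remaining)
        if hit is None:  # the rest are unreachable in the current graph
            break
        here, _ = hit
        order.append(here)
        remaining.discard(here)
    return order
```

Because the graph is edited as changes are detected, the order would be recomputed after every modification rather than planned once.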

As a note, we made some simplifications for time’s sake: the width of corridors in the maze is fixed at 0.5 m, and turns are always at right angles.

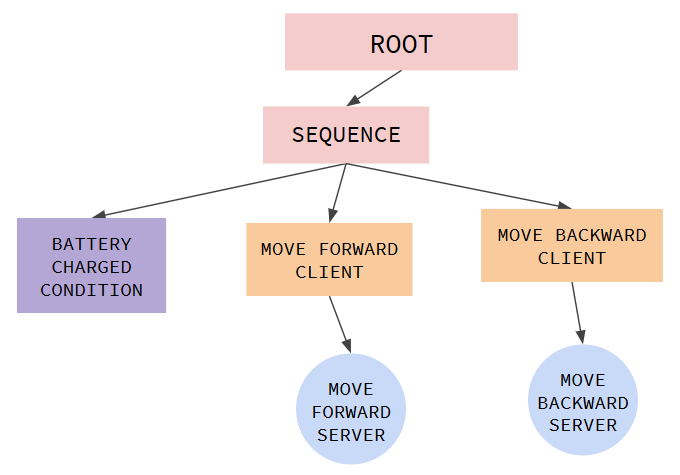

We designed a maze-solving navigation system for the robot that uses a depth-first search (DFS) algorithm for graph traversal. Our solution has two primary components, laserutils and pid_explore, which work together to handle the robot's navigation and exploration within the maze.

The laserutils module is a collection of utility functions for processing laser scan data and assisting navigation. It calculates average distances in given directions, provides a simplified 90-degree vision framework, detects intersections, and implements a PID controller to keep the robot on a straight path. It also includes functions for converting yaw values, formatting nodes, and handling the graph data structure.
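The sketch below shows the kind of helpers laserutils provides. It makes several assumptions:

- The ranges array has 360 elements, one reading per degree.
- Index 0 points straight ahead, and angles increase counterclockwise, so 90 is left and 270 is right (as on many TurtleBot lidars).
- A direction counts as open in 0.5 m corridors when its average reading exceeds a hypothetical 0.6 m threshold.

The function names and gains are illustrative, not the module's actual API:

```python
import math


def direction_average(ranges, center_deg, half_width_deg=10):
    """Mean of the valid lidar readings within +/- half_width_deg of center_deg.

    Assumes 360 readings, one per degree, index 0 straight ahead and angles
    increasing counterclockwise (90 = left, 270 = right). Zero, inf, and NaN
    readings are skipped; if nothing valid remains, the direction is reported
    as infinitely far away (i.e. open).
    """
    values = []
    for offset in range(-half_width_deg, half_width_deg + 1):
        r = ranges[(center_deg + offset) % 360]
        if r > 0 and math.isfinite(r):
            values.append(r)
    return sum(values) / len(values) if values else math.inf


def detect_openings(ranges, open_threshold=0.6):
    """Which directions look open? The 0.6 m threshold is a hypothetical value."""
    return {
        "front": direction_average(ranges, 0) > open_threshold,
        "left": direction_average(ranges, 90) > open_threshold,
        "right": direction_average(ranges, 270) > open_threshold,
    }


def is_intersection(openings):
    """A plain corridor is open only to the front; a side opening or a dead end is a node."""
    return openings["left"] or openings["right"] or not openings["front"]


class PID:
    """Textbook PID controller; the caller picks the error signal and the gains."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def update(self, error, dt):
        self.integral += error * dt
        # No derivative term on the first call, since there is no previous error yet.
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

To hold the robot in the middle of a corridor, one option is to use `left - right` (the averages at 90 and 270 degrees) as the error. If the robot drifts toward the right wall, the error becomes positive, and `pid.update(left - right, dt)` returns a positive, counterclockwise angular velocity that steers it back toward the center.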

The pid_explore module is the core of the robot's navigation system. It subscribes to laser scan and odometry data and uses the laserutils functions to process that data and make decisions. The robot operates in three states: "explore," "intersection," and "position."

In the "explore" state, the robot uses the PID controller to drive straight until it encounters an intersection, which triggers a transition to the "intersection" state. There, the robot labels the intersection, checks whether the path continues, and decides its next move. If it needs to turn, it switches to the "position" state, where it determines the turn direction (left or right) and completes the maneuver before resuming its straight path.
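The state transitions above can be sketched as a pure function, plus a yaw check that decides when a turn is finished. This is an illustrative reconstruction, not the pid_explore code. The argument names, the "back" move, and the 3-degree tolerance are assumptions, and yaw is assumed to come from odometry in radians:

```python
import math
from enum import Enum


class State(Enum):
    EXPLORE = "explore"
    INTERSECTION = "intersection"
    POSITION = "position"


def next_state(state, at_intersection=False, chosen_move=None, turn_done=False):
    """One transition of the explore -> intersection -> position loop.

    at_intersection: the lidar shows a side opening or dead end (used in EXPLORE).
    chosen_move: "straight", "left", "right", or "back", decided in INTERSECTION.
    turn_done: the robot has finished rotating (used in POSITION).
    """
    if state is State.EXPLORE:
        return State.INTERSECTION if at_intersection else State.EXPLORE
    if state is State.INTERSECTION:
        # After labeling the node: keep driving, or rotate into the chosen corridor.
        return State.EXPLORE if chosen_move == "straight" else State.POSITION
    if state is State.POSITION:
        return State.EXPLORE if turn_done else State.POSITION
    raise ValueError(f"unknown state: {state!r}")


def angle_diff(a, b):
    """Signed difference a - b in radians, wrapped into [-pi, pi)."""
    return (a - b + math.pi) % (2 * math.pi) - math.pi


def turn_complete(start_yaw, yaw, direction, tol=math.radians(3)):
    """True once the yaw change since start_yaw matches the requested right-angle turn."""
    target = {"left": math.pi / 2, "right": -math.pi / 2, "back": math.pi}[direction]
    turned = angle_diff(yaw, start_yaw)
    return abs(angle_diff(turned, target)) < tol
```

Wrapping the angle difference matters because odometry yaw jumps from +π to -π. Without the wrap, a left turn that starts near +π would never appear to finish.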

Our navigation system uses a Graph class to store and manage the maze's structure and a DFS algorithm to find paths between nodes in the maze. The result is a maze-solving robot that navigates its environment strategically rather than by brute force.
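Below is a sketch of DFS path finding over an adjacency map of the form node → {neighbor: distance}. The function name is hypothetical. DFS returns *a* path, not necessarily the shortest one. A shortest-path guarantee would require something like Dijkstra's algorithm:

```python
def dfs_path(adj, start, goal):
    """Iterative depth-first search returning one path from start to goal, or None."""
    stack = [(start, [start])]
    visited = set()
    while stack:
        node, path = stack.pop()
        if node == goal:
            return path
        if node in visited:
            continue
        visited.add(node)
        # Push neighbors in reverse-sorted order so they are explored in sorted order.
        for neighbor in sorted(adj.get(node, {}), reverse=True):
            if neighbor not in visited:
                stack.append((neighbor, path + [neighbor]))
    return None
```

The `visited` set is what keeps DFS from looping forever once the maze graph contains cycles.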

We built this project to address the challenges faced by robots in navigating unpredictable and dynamic environments, such as active construction sites or disaster zones. Traditional maze-solving algorithms, based on brute force and heuristics, are inadequate in such situations due to the constantly changing nature of the environment.

Our goal was to develop an intelligent navigation system that could adapt to these changes in real time and continue to find good paths despite the uncertainties. By creating a program that can autonomously drive a robot through a changing maze, we aimed to advance robotic navigation capabilities and make robots more efficient and useful in a wide range of applications.

With our innovative approach of "on the fly" map creation and navigation, we sought to enable robots to traverse dynamic spaces with minimal prior knowledge, making them more versatile and valuable in industries that require real-time adaptability. By simultaneously building and updating the map as the robot navigates, we ensured that the robot could rely on its current understanding of the environment to make informed decisions.

Ultimately, we built this project to push the boundaries of robotic navigation, opening up new possibilities for robots to operate in complex, ever-changing environments, and offering solutions to a variety of real-world challenges.

The project began in our first week with a theoretical discussion of how to approach the problem and what simplifications to make. We started by learning how to build simulations in Gazebo and implementing our lidar methods. We were then able to create simple demos of an initial exploration algorithm built around the “explore”, “intersection”, “position” approach. We encountered Gazebo issues early on, and they persisted throughout the project: walls lost their physics whenever the world was reset. At this point we built larger models in Gazebo to test with and implemented a PID algorithm to smooth the robot’s traversal. The PID controller went through several versions over the course of the project, especially as we tuned navigation to be more precise while reducing our hall width from 1 m to 0.5 m. We then designed and integrated our graph data structure and implemented a DFS algorithm to automatically find paths from node to node. Next, we added node labeling and cycle detection to the graph so the robot could recognize previously visited nodes. In the last stage, one group member’s GitHub account became permanently disconnected from our development environment, but all challenges are meant to be overcome. We finished by accounting for dynamic change in our algorithm and designing a demo.
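Recognizing a previously visited node is what lets the graph close cycles instead of creating duplicate intersections. The report does not describe how this matching is done. The sketch below assumes one plausible approach: an intersection counts as already known if its odometry position lies within 0.25 m (half the 0.5 m corridor width) of a stored one. The class name and the label format are hypothetical:

```python
import math


class NodeRegistry:
    """Assigns labels to intersections and recognizes ones seen before.

    Hypothetical approach: a new intersection is the same as a stored one if
    its odometry position is within `tolerance` meters of it.
    """

    def __init__(self, tolerance=0.25):
        self.tolerance = tolerance
        self.positions = {}  # label -> (x, y) in the odometry frame

    def label_for(self, x, y):
        """Return (label, is_new); reuse an existing label when within tolerance."""
        for label, (px, py) in self.positions.items():
            if math.hypot(x - px, y - py) <= self.tolerance:
                return label, False  # revisit: this closes a cycle in the graph
        label = f"N{len(self.positions)}"
        self.positions[label] = (x, y)
        return label, True
```

Odometry drifts over time, so in practice the tolerance trades off between missing real revisits and merging distinct intersections.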

Despite our trouble with the Gazebo simulations, we were surprised to see that our algorithm worked well when we moved our tests to the physical robots. PID in particular, which had been troublesome in testing, actually worked better in the real world than in simulation. We cut a few corners in robustness (i.e., the agreed-upon simplifications such as right-angle-only turns), but within these constraints the final product is adaptable and accounts for a number of edge cases. One improvement we would have liked to make is moving past the simplifications of right-angled intersections and fixed hall width. Supporting intersections that are not right-angled would greatly change the way we detect and encode intersections for the graph. A next algorithmic step could be to have two robots traverse the maze from different starting points at the same time while sharing information with each other, the equivalent of running two concurrent pointers in the graph algorithm. Overall, though the project seems simple on the surface, we believe that, as a graph algorithms problem, it is not only an important subject in robotics (maze traversal) but also a relatively unexplored problem in the larger world of computer science: searching an unreliable, live-modified graph.

The central challenge was efficiently searching our graph for the maze exit when the maze’s dynamic nature makes the graph unreliable.

Entity Used for Consulting: https://chat.openai.com

laserUtils.py: processes lidar data

pid_explore.py: navigates the maze

Naimul Hasan (naimulhasan@brandeis.edu | LinkedIn) Jimmy Kong (jameskong@brandeis.edu | LinkedIn) Brandon J. Lacy (blacy@brandeis.edu | LinkedIn)

May 5, 2023

“You can’t be an expert in everything. Nobody’s brain is big enough.” Pito has said this at least twice this semester and once last semester in his software entrepreneurship course, but it didn’t truly sink in until I, Brandon, sat down to write this report with my teammates, Naimul and Jimmy.

I’ve greatly enjoyed group projects in my professional experience, but I always erred on the side of caution with group work in college, as there’s a much higher chance that an individual won’t pull their weight. That fear of lackluster performance from other team members has always driven me to work independently. I’d always ask myself, “What is there to lose when you work alone? You’ll grow your technical expertise horizontally and vertically en route to a resume that lands the job you’ve always wanted, never mind avoiding all the headaches that come from working with others. There is nothing to lose, right?”

Wrong.

You lose out on the possibility that the individuals you could’ve partnered with are good people, with strong technical skills that would’ve made your life a whole lot easier throughout the semester through the separation of duties. You lose out on a lot of laughs and the opportunity to make new friends.

Most importantly, however, you lose out on the chance of being partnered with individuals who are experts in areas completely foreign to you. There is nobody to counter your weaknesses. You can’t be an expert in everything, but you can take the gamble of partnering with others to collectively form one big brain full of expertise, capable of a project of the magnitude we accomplished this semester.

I know my brain certainly wasn’t big enough to accomplish this project on my own. I’m grateful these two men reached out to me with interest in this project. Collectively, we’ve set the foundation for future iterations of the campus rover, and we think that’s pretty special considering the long-term impact beyond the scope of this course. So I guess Pito was right after all: one brain isn’t big enough to carry a project, but multiple brains, each contributing a different area of expertise, are a recipe for success.

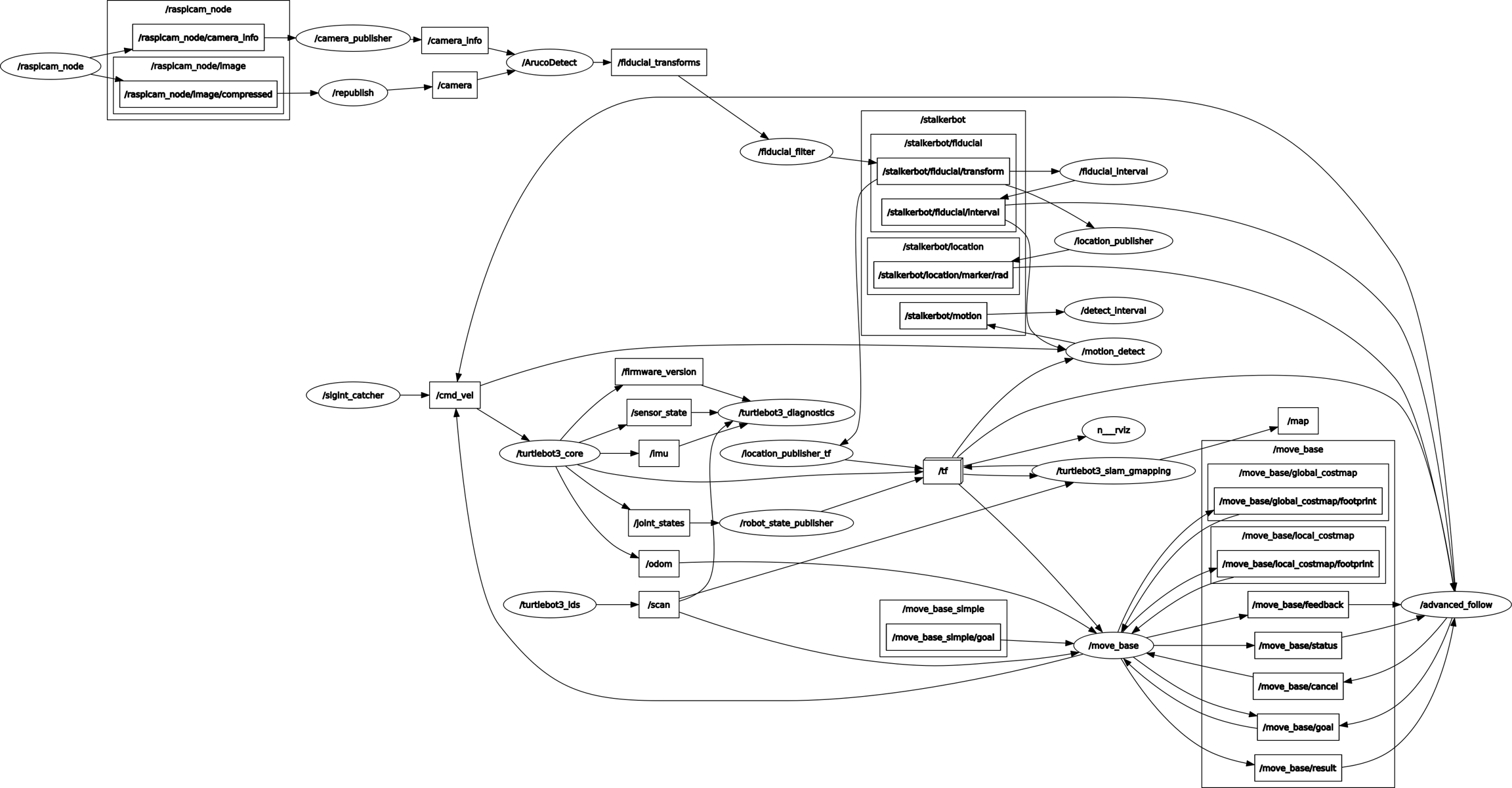

The command and control dashboard, also known as the campus rover dashboard, is a critical component of the campus rover project at Brandeis University, because it is the medium through which an operator remotely controls the robot. It combines three components: a web client, a robot, and GPS. Each component is a separate entity within the system, and they use the ROS inter-process communication model to transmit data as messages between nodes. The campus rover project could progress in various directions, whether as a tour guide for prospective students or a package delivery service among faculty. Our team intended the command and control dashboard to serve as the foundation for future development, whichever direction the project takes.

Our web client is built entirely with React.js. The app.js file manages routes, and React-Bootstrap is used to create certain components, such as buttons. The code is structured as follows: app.js contains the header, body, and footer components. The header component holds the top-most part of the dashboard, which includes the application name and links to the different pages. The footer component holds the bottom-most part of the dashboard, which lists the name of each team member. The body component is the core of the application and contains the routes that direct users to the various pages: about, help, home, and settings. Each of these pages uses components that are created and housed in the rosbridge folder; you can think of them as parts of the page, such as the joystick or the video feed. The ros_configs file holds the default values for the settings configuration. We use local storage to save the settings values and prioritize them over the defaults when they exist.

ROSBridge is a ROS package that provides a JSON-based interface to ROS over the WebSocket protocol (which runs over TCP). It allows external applications to communicate with ROS over the web without using the native ROS communication protocol, making it easier to build web-based interfaces for ROS-based robots. ROSBridge acts as a bridge between the web application and the ROS system: it listens for incoming WebSocket connections and translates JSON messages into ROS messages and vice versa. ROSLIBJS is a JavaScript library that gives web applications a simple API for talking to ROSBridge, so developers can send and receive messages, subscribe to topics, and call ROS services over WebSockets. A typical application using ROSBridge and ROSLIBJS follows this flow:

The web client uses ROSLIBJS to open a WebSocket connection to the ROSBridge server at a specified IP address and port number.

The web client sends JSON messages to ROSBridge, which converts them to ROS messages and forwards them to the appropriate ROS nodes.

When ROS nodes publish on a topic the client has subscribed to, or reply to a service call, ROSBridge converts those ROS messages to JSON and sends them over the WebSocket connection to the web client.

The ROSLIBJS API processes the incoming JSON messages so the data can be displayed or used by the web client.
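These are the kinds of JSON packets that travel over the WebSocket in this flow. They follow the rosbridge protocol's `op`, `topic`, `type`, and `msg` fields. ROSLIBJS builds them for the dashboard; the Python below only illustrates their shape. The function names are hypothetical. The /cmd_vel topic and geometry_msgs/Twist type in the usage example are assumptions based on common TurtleBot conventions, not taken from the project:

```python
import json


def advertise_msg(topic, msg_type):
    """Announce that the client will publish `msg_type` messages on `topic`."""
    return json.dumps({"op": "advertise", "topic": topic, "type": msg_type})


def publish_msg(topic, msg):
    """Publish a message, given as a dict mirroring the ROS message fields."""
    return json.dumps({"op": "publish", "topic": topic, "msg": msg})


def subscribe_msg(topic, msg_type):
    """Ask ROSBridge to forward every message on `topic` to this client."""
    return json.dumps({"op": "subscribe", "topic": topic, "type": msg_type})


def handle_incoming(raw):
    """Return (topic, msg) for a message ROSBridge forwards, else None."""
    packet = json.loads(raw)
    if packet.get("op") == "publish":
        return packet["topic"], packet["msg"]
    return None
```

For example, a joystick could produce `publish_msg("/cmd_vel", {"linear": {"x": 0.2, "y": 0.0, "z": 0.0}, "angular": {"x": 0.0, "y": 0.0, "z": 0.5}})`, sent after the matching `advertise_msg("/cmd_vel", "geometry_msgs/Twist")`. This would drive forward at 0.2 m/s while turning counterclockwise.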

GPS, or Global Positioning System, is a widely used technology that enables location tracking and navigation anywhere in the world through trilateration, a process that determines the receiver’s position by measuring its distance to several satellites. Although it has revolutionized the way we navigate and track objects, its accuracy varies with a number of factors that are beyond the scope of this paper. However, various companies have taken on the challenge of building more accurate positioning systems that incorporate other data sources into the algorithm that determines the receiver’s position. Apple is known for the pinpoint location accuracy of its devices, which use sources such as nearby Wi-Fi networks and cell towers to improve accuracy and speed up location fixes. A more sophisticated location technology is critical for this project to navigate routes on our university campus. We therefore placed an iPhone 11 Pro running the iOS application GPS2IP on our robot, taking advantage of the location technology in Apple’s devices to track the robot’s movement.

GPS2IP is an iOS application that transforms an iPhone or iPad into a wireless GPS transmitter over the network. It possesses various features such as high configurability, background operation on the iPhone, and standard NMEA message protocols. There is a free lite version available for testing and system verification as well as a paid version with all available features.

Nodes

/gps

The node’s purpose is to listen to GPS2IP through a socket and publish the data on a gps topic. The GPS2IP iOS application turns the iPhone into a portable, highly capable GPS receiver that provides an accurate location when the phone is placed on the robot. GPS2IP sends the GPS data as GLL NMEA messages from a given IP address and port number. The /gps node uses Python’s socket package to listen for messages at that IP address and port. Through this connection, it receives a stream of GLL messages, parses them, and transforms them into a format the web client can interpret. The main transformation converts latitude and longitude from degrees and decimal minutes (DDM) to decimal degrees (DD). The node publishes continuously until the socket connection is interrupted. The topic’s message type is String, holding a Python dictionary serialized to JSON with the json package.

Code
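Below is a minimal sketch of the parsing and conversion step. It assumes GPS2IP is set to send only GLL sentences over a TCP socket as newline-terminated text, and the function names are hypothetical. A GLL sentence carries latitude and longitude in degrees and decimal minutes. For example, 4916.45,N means 49 degrees 16.45 minutes, or 49 + 16.45 / 60 ≈ 49.274167 decimal degrees; south and west become negative:

```python
import json
import socket


def ddm_to_dd(value, hemisphere):
    """Convert NMEA degrees and decimal minutes (ddmm.mmmm or dddmm.mmmm) to signed decimal degrees."""
    raw = float(value)
    degrees = int(raw // 100)
    minutes = raw - degrees * 100
    dd = degrees + minutes / 60.0
    return -dd if hemisphere in ("S", "W") else dd


def parse_gll(sentence):
    """Parse a GLL sentence such as '$GPGLL,4916.45,N,12311.12,W,225444,A,*1D'.

    Returns {'latitude': ..., 'longitude': ...} in decimal degrees, or None if the
    sentence is not GLL or its status field is not 'A' (valid). The checksum is
    not verified in this sketch.
    """
    fields = sentence.strip().split("*")[0].split(",")
    if len(fields) < 7 or not fields[0].endswith("GLL"):
        return None
    if fields[6] != "A":
        return None
    return {
        "latitude": ddm_to_dd(fields[1], fields[2]),
        "longitude": ddm_to_dd(fields[3], fields[4]),
    }


def stream_gll(host, port, publish):
    """Read newline-terminated NMEA text from a TCP socket and pass JSON strings to `publish`."""
    with socket.create_connection((host, port)) as sock:
        buffer = b""
        while True:
            chunk = sock.recv(1024)
            if not chunk:  # the phone closed the connection
                break
            buffer += chunk
            while b"\n" in buffer:
                line, buffer = buffer.split(b"\n", 1)
                fix = parse_gll(line.decode("ascii", errors="ignore"))
                if fix is not None:
                    publish(json.dumps(fix))
```

In a ROS node, `publish` could wrap `rospy.Publisher("gps", String, queue_size=10).publish`. `stream_gll` would then be called with the IP address and port shown in the GPS2IP app.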

img_res

The node’s purpose is to change the quality of the image by changing its resolution. It does this with OpenCV (cv2) and cv_bridge in Python. The cv_bridge package provides the CvBridge object, which converts a ROS CompressedImage to an OpenCV image and back. Depending on the hardware configuration, the node subscribes to one of two camera topics, /camera/rgb/image/compressed or /raspicam_node/image/compressed. It also subscribes to the /image_configs topic to receive the resolution specifications from the web client. It publishes the altered image on a new camera topic, /camera/rgb/image_res/compressed or /raspicam_node/image_res/compressed, again depending on the hardware configuration. The web client subscribes to this topic for the camera feed.

Code

/rostopiclist

The node’s purpose is to retrieve the output of the terminal command “rostopic list”, a list of the currently published topics. It does this with Python’s subprocess package, which runs the command in a child process. The output is then cleaned and published as a String on the “/rostopic_list” topic. The web client subscribes to this topic, splits the data on the comma delimiter, and uses the result to build a scrollable menu that displays all of the available rostopics.

Code
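The node's core logic can be sketched as below. It assumes `rostopic` is on the PATH of a sourced ROS environment, and the function names are hypothetical. `parse_topic_list` mirrors in Python the comma split that the web client performs in JavaScript:

```python
import subprocess


def format_topic_list(raw_output):
    """Turn `rostopic list` output (one topic per line) into one comma-separated string."""
    topics = [line.strip() for line in raw_output.splitlines() if line.strip()]
    return ",".join(topics)


def parse_topic_list(data):
    """Client-side inverse: split the published string on commas, ignoring empty entries."""
    return [topic for topic in data.split(",") if topic]


def current_topics():
    """Run `rostopic list` in a child process; needs a sourced ROS environment."""
    result = subprocess.run(
        ["rostopic", "list"], capture_output=True, text=True, check=True
    )
    return format_topic_list(result.stdout)
```

In the node, the string from `current_topics()` would be published on /rostopic_list. One option is to republish it periodically so the menu reflects topics that appear later.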

Architecture

The command-control repository has six branches, one of which is deprecated, leaving five active branches:

main: The branch contains the stable version of the project. It is locked, which means no one can directly push to this branch without another person reviewing and testing the changes.

ros-api: The branch was initially committed to develop an API through FastAPI, but was later deprecated because the functionality could be implemented with ROSBridge and ROSLIBJS.

ros-robot: The branch focuses on the development and integration of the Robot Operating System (ROS) with a physical or simulated robot. It includes the launch files that run the GPS node, the dynamic image compression node, and ROSBridge.

test-old-videofeed: The branch was created to test and debug the previous implementation of the dashboard, which was built five years ago. We imported the video from the old dashboard to our dashboard but later deprecated this implementation as it used additional redundant dependencies which can be implemented with ROSBridge and ROSLIBJS.

test-old-web-client: The branch is to test and understand the previous version of the web client dashboard. It helped us realize areas for improvement, such as a way to set the robot’s IP address within the web application. It also set the tone for our simplified dashboard format.

web-client: The branch is for ongoing development of the new web client. It includes the latest features, improvements, and bug fixes for the web client, which may eventually be merged into the main branch after thorough testing and review.

Contribution Policy

This section guides you through contributing to the GitHub repository according to the guidelines in the CONTRIBUTING.md file. That file specifies that the main branch is locked and cannot be pushed to directly, which ensures that changes are reviewed before being merged.

Update the Main Branch

Ensure you are on the main branch and pull down the new changes.

Resolve any conflicts which may arise at this time.

Create a New Branch

Create a new branch for the feature or issue and switch to it.

Verify the current branch is the correct branch before you begin work.

Build Feature

Develop the feature or fix the issue on the new branch.

Check for Conflicts within the Main Branch

Ensure there are no conflicts with the main branch prior to the creation of the pull request. Update the main branch and merge the changes into your feature branch.

Create a Pull Request (PR)

Push the changes to the remote repository and create a pull request on GitHub. Provide a clear and concise description of the purpose of the feature as well as how to test it. Ensure you mention any related issue numbers.

Request a Review

Ask another team member to review your pull request and run the code locally to ensure there are no errors or conflicts.

Merge the Branch

Once the review is complete and the changes are approved, the feature branch can be merged into the main branch through the pull request.

Installation and Run

Clone the GitHub repository which contains the web client code.

Install the necessary dependencies by running ```npm install``` in the terminal.

Start the web client by running ```npm start``` in the terminal.

Open a web browser and type in http://localhost:3000.

Navigate to the settings page of the web client and configure the settings for your setup.

Web Client Settings

ROSBridge Server IP Address: The IP address of the ROSBridge server, which connects the web application to the ROS environment.

Port: The port number for the ROSBridge server.

Video Resolution Width: The width of the video resolution for the ROS camera feed.

Video Resolution Height: The height of the video resolution for the ROS camera feed.

Video Frame Width: The width of the video frame for the ROS camera feed.

Video Frame Height: The height of the video frame for the ROS camera feed.

Show Battery Status: A toggle to display the battery status in the web application.

Manual Input Teleoperation: A toggle to enable or disable the manual teleoperation control input to replace the joystick.
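The dashboard applies a simple precedence rule between saved and default settings: stored values win, and defaults fill any gaps. It implements this in JavaScript with localStorage. Purely to illustrate the logic, here it is in Python, with hypothetical key names and default values. The one real value is 9090, which is rosbridge_server's default WebSocket port. Falling back to the default when a stored value is empty or cannot be converted also avoids conversion errors from empty stored data:

```python
# Hypothetical keys and defaults; the dashboard keeps its real defaults in its own config file.
DEFAULTS = {
    "rosbridgeServerIp": "localhost",
    "rosbridgeServerPort": 9090,
    "videoWidth": 640,
    "videoHeight": 480,
    "showBatteryStatus": True,
}


def resolve_settings(defaults, stored):
    """Merge saved settings over defaults.

    `stored` holds strings, as localStorage does. A stored value wins unless it is
    missing, empty, or cannot be converted to the default's type, in which case the
    default is used instead.
    """
    resolved = {}
    for key, default in defaults.items():
        raw = stored.get(key)
        if raw is None or raw == "":
            resolved[key] = default
            continue
        try:
            # bool must be checked before int, because bool is a subclass of int.
            if isinstance(default, bool):
                resolved[key] = str(raw).lower() in ("true", "1")
            else:
                resolved[key] = type(default)(raw)
        except (TypeError, ValueError):
            resolved[key] = default
    return resolved
```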

Run with ROS Robot

SSH into the robot.

Open the GPS2IP iOS application. In the application settings, under “NMEA Messages to Send”, solely toggle “GLL”. Once set, toggle the “Enable GPS2IP” on the homepage of the application. Additionally, turn off the iOS auto-lock to prevent the application connection from being interrupted. Place the device on the robot.

Launch the ROS launch file that runs the vital nodes: gps, img_res, and rostopiclist. Use sim.launch for a Gazebo simulation and real.launch for a real robot.

Run any other nodes that you desire.

Ensure the web client has the correct ROSBridge Server IP Address and Port set within the settings page. You can check this with the “current configurations” button.

If you want the camera feed to show up, make sure the correct camera topic is set in the img_res node module. The topic varies with the hardware. Both options are listed in the file, one commented out and the other active; select one and comment out the other.

Enjoy the web client!

I, Brandon, had initially intended to work on this project independently, but Naimul and Jimmy later reached out to me to form the team as it stands. The project was initially framed as a simple remote teleoperation application in which a user could control the robot with an arrow pad. It has since evolved into a more sophisticated product thanks to the expertise of my teammates. The project is now a dashboard with a joystick for more intuitive control, a live camera feed, and GPS localization. It far exceeds the initial outline as a result of the additional requirements that come with a three-person team. Pito proposed various ideas associated with the campus rover, and we chose of our own accord to combine the dashboard with GPS localization to create a strong command center for a user to control a robot of choice.

The team was constructed based upon a single common interest, participation within a final project that challenged our software engineering skills within a new domain that would have a long-term impact beyond this semester. It was coincidental that our individual areas of expertise balanced out our weaknesses. The team was balanced and all members were extremely active participants throughout weekly stand ups, discussions, and lab work.

Separation of Duties

Naimul Hasan

Throughout the semester, my responsibilities evolved according to the team's requirements. Initially, after Jimmy provided a visual representation of the previous dashboard, I designed an intuitive and user-friendly Figma layout. I then examined the code and relevant research, which led me to employ ROSLIBJS and ROSBridge for the project. I dedicated a week to establishing a workflow standard for the team, including locking down the main branch and authoring a CONTRIBUTING.md document to ensure everyone understood and followed the desired workflow. After gaining an understanding of WebSocket communication between ROSLIBJS and ROSBridge, I focused on developing the Home page, which now features a video feed, a map, and a teleop joystick component. To avoid opening a separate WebSocket connection for each component, I transitioned to a React Class Component with a global ROS variable. This enabled the components to subscribe and publish messages seamlessly. Additionally, I modified one of the ROSLIBJS dependencies to prevent app crashes and created a default settings configuration for simulation mode, which Jimmy later integrated with localStorage. The initial configuration took a significant portion of our time; next came the development of the map component. After exploring various options, such as react-leaflet and Google Maps, I chose Mapbox due to its large community and free API for GPS coordinates. Simultaneously, James and I resolved a dashboard crash caused by empty localStorage data triggering conversion errors. Collaborating with Brandon, I worked on integrating GPS data into the dashboard. We encountered a problem where the frontend couldn't correctly read the message sent as a string. After a week, we devised a solution in the Python code, converting the GPS data from Degrees and Decimal Minutes (DDM) to Decimal Degrees (DD). This adjustment enabled us to track movements on the map accurately.
Additionally, I was responsible for deploying the app, initially attempting deployment to Netlify and Heroku. After evaluating these options, I ultimately settled on Azure, which provided the most suitable platform for our project requirements.

Whether the app is deployed locally, within the Kubernetes cluster on campus, or on Azure, the primary requirement for communication between the laptop/client and the robot is that both are connected to the same network. The dashboard relies on WebSocket communication, facilitated by ROSLIBJS and ROSBridge, to exchange data and control messages with the robot. These WebSocket connections can be established over the same local network, allowing seamless interaction between the robot and the dashboard.

Jimmy Kong

At the beginning of the semester, my work started out as researching the old web client to see if we could salvage features. I was able to get the old web client working, which helped us research and analyze how rosbridge worked and how our web client would connect to it. After we got a good foundational understanding from the old web client, I started working on a settings page that would give the user a more convenient and customizable experience. The settings page went through multiple iterations before it became what it is today, as it started out very simple. In the following weeks I worked on additional components:

- the dropdown menu that shows the list of ros topics
- the dropdown menu that shows the current settings configuration
- the manual input teleoperation form that lets the user enter numbers instead of using the joystick
- the battery status indicator that shows the robot's battery level
- a clear button to clear the input boxes
- a reset-to-default button to clear locally stored values
- a placeholder component that displays a video error message
- last but not least, dark mode, for theming the website and for jokes, since we basically wrapped up the project with a few days to spare

For the dropdown menu that shows the list of ros topics, I also had to create a Python node that runs the “rostopic list” command and publishes its output as a comma-separated string on a ROS topic. I then subscribed to that topic and split it on commas on the web client side for the rostopiclist button. After adding all these components, I also added the corresponding toggles (where applicable, such as dark mode or battery status) to the settings page, giving the user the option to disable these features if they did not wish to use them.
Adding forms and buttons to the settings page meant we needed a way to save settings configurations so they would survive new browser sessions, page refreshes, and switching between pages. To solve this, I used localStorage, which worked well, and wrote logic that decides when to use the values in localStorage and when to fall back to the defaults stored in our ros_configs file. After finishing the localStorage implementation, I added form validation along with quality-of-life error indicators and alert messages that tell the user whether their input is valid. Beyond the settings page, I handled the overall theming and color scheme of the website and made various CSS tweaks so that it looks more presentable and less like a prototype. I also restyled the about page and created a new help page with instructions for running the web client. In summary, I was responsible for creating multiple web client components and their corresponding configuration options on the settings page, the website's aesthetics, and the help page. Lastly, I also edited the demo video.

Brandon J. Lacy

My contribution to this project can be broken down into two phases: team management and technical implementation. I came up with the project idea and formed the team. I delegated duties among the team members, led our weekly meetings outside of class, and set the deadlines that kept the team on track throughout the semester. My technical contribution centered on the GPS implementation: I selected the GPS receiver, an iPhone running the GPS2IP iOS application, and wrote the Python code that receives data from that receiver over the network. I was also responsible for the node that changes the resolution of the image displayed on the web client. Finally, I wrote 75% of the report and the entire poster.

Major Hurdles

Initially, the dashboard was hosted on Naimul's laptop for testing purposes, which allowed the team to evaluate its functionality and performance in a controlled environment. When we tried to deploy the dashboard on Netlify, we encountered several issues that hindered the deployment process and the app's performance.

One of the primary issues with Netlify was that it could not run the specific version of Node.js required by the dashboard, which we needed in order to avoid version mismatches. This limitation made it difficult to build the app with Netlify's default build process, forcing us to build it manually instead. However, even after successfully building the dashboard manually, we faced additional problems with the deployment: the app would not load the map component and other pages as expected, which significantly impaired its overall functionality and user experience.

We therefore looked for a new platform that could host the dashboard 24/7 and pull down any changes from the main branch. Azure allowed us to install specific versions of the dashboard's dependencies while leaving headroom in resource availability in case of heavy traffic. As mentioned above, regardless of whether the app is deployed locally, within the Kubernetes cluster on campus, or on Azure, the laptop/client and the robot must be connected to the same network, because the dashboard exchanges data and control messages with the robot over WebSocket connections managed by the ROSLIBJS and ROSBridge components.

Major Milestones

Public Web Client hosting

Hosting the web client publicly, so that anyone can access the website with a link, was a major milestone. Hosting only through localhost was not practical, since it is meant for development use and would not be very useful to anyone else. More is explained in the Major Hurdles section above.

GPS

The implementation of GPS was a major milestone in our project, as it was the most difficult part to design. When the project was initialized, there was no intention to incorporate GPS into the dashboard; however, the project's requirements increased when the team expanded to three people. The GPS portion of the project was, quite frankly, dreaded, so completing the Python code for a ROS node that listens to the GPS data published by the GPS2IP iOS application was cause for a huge celebration. The highlight came when we subscribed to the topic on the front end and the position shown on the map was accurate and moved smoothly with our movement across campus.
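The core conversion in a GPS node like this one is turning NMEA coordinates, which arrive as degrees and decimal minutes (ddmm.mmmm), into the signed decimal degrees a web map expects. The sketch below assumes a `$GPGLL`-style sentence, whose first four fields after the message ID are latitude, N/S, longitude, and E/W (the same field order assumed by the `gps_keys` list in the node code at the end of this document). It also checks the standard NMEA checksum: the XOR of every character between `$` and `*`, written as two hex digits. The function names and sample coordinates are illustrative only.

```python
from functools import reduce


def nmea_checksum(body):
    """XOR of all characters between '$' and '*', as two uppercase hex digits."""
    return format(reduce(lambda acc, ch: acc ^ ord(ch), body, 0), "02X")


def ddm_to_dd(value, hemisphere):
    """Convert ddmm.mmmm (or dddmm.mmmm) to signed decimal degrees."""
    degrees, minutes = divmod(float(value), 100)
    dd = degrees + minutes / 60
    return -dd if hemisphere in ("S", "W") else dd


def parse_gll(sentence):
    """Parse a $GPGLL sentence into (lat, lon); raise ValueError if corrupt."""
    body, _, checksum = sentence.strip().lstrip("$").partition("*")
    if checksum and checksum.upper() != nmea_checksum(body):
        raise ValueError("NMEA checksum mismatch")
    fields = body.split(",")
    if fields[0][2:] != "GLL":
        raise ValueError("not a GLL sentence: " + fields[0])
    lat = ddm_to_dd(fields[1], fields[2])
    lon = ddm_to_dd(fields[3], fields[4])
    return lat, lon


# Illustrative sentence; the checksum is computed rather than hard-coded.
body = "GPGLL,4221.1234,N,07115.5678,W,123519,A"
sentence = "$" + body + "*" + nmea_checksum(body)
print(parse_gll(sentence))
```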

Dark Mode

Dark mode, a seemingly small feature, turned out to be a monumental milestone in our project. Its implementation was far from smooth sailing, and it presented us with unexpected challenges along the way. Little did we know that this seemingly simple concept would revolutionize the entire user experience and become the most crucial component of our project. Initially, we underestimated the complexity of integrating dark mode into our dashboard. We had to navigate through a labyrinth of CSS styles, meticulously tweaking each element to ensure a seamless transition between light and dark themes. Countless hours were spent fine-tuning color schemes, adjusting contrasts, and experimenting with different shades to achieve the perfect balance between aesthetics and readability. The moment dark mode finally came to life, everything changed. It was as if a veil had been lifted, revealing a whole new dimension to our dashboard. The sleek, modern interface exuded an air of sophistication, captivating users and immersing them in a visually stunning environment. It breathed life into every element, enhancing the overall user experience. It quickly became apparent that dark mode was not just another feature; it was the heart and soul of our project. The dashboard transformed into an oasis of productivity and creativity, with users effortlessly gliding through its streamlined interface. Tasks that were once daunting became enjoyable, and the project as a whole gained a newfound momentum. In fact, we can boldly assert that without dark mode, our dashboard would be practically unusable. The blinding glare of the bright background would render the text illegible, and the stark contrast would induce eye strain within minutes. Users would be left squinting and frantically searching for a pair of sunglasses, rendering our carefully crafted functionalities utterly useless. Dark mode's significance cannot be overstated. 
It has redefined the essence of our project, elevating it to new heights. As we reflect on the struggles, the countless lines of code, and the sleepless nights spent perfecting this feature, we cannot help but celebrate the impact it has had. Dark mode, the unsung hero of our project, has left an indelible mark on our journey, forever changing the way we perceive and interact with our dashboard.

We sought a deeper understanding of the ROS inter-process communication model so that our web application could take advantage of it, and we have successfully developed this knowledge. The project also let us integrate our software development knowledge with ROS to create a complex system that will stand out on our respective resumes. We are proud of the product created in this course and see it as a major improvement over the previous implementation of the dashboard. The only aspect we are not satisfied with is the lag in the camera feed and joystick. There are, however, areas for improvement that we recommend the next team address. The lag issue described above is one of them, as it decreases the usability of the product. It would also be useful to create a map of the campus with waypoints so that GPS can be used to plan a path from one place to another. There are a variety of improvements to be made to continue shaping Campus Rover into a finished product; it is up to the next generation of students to decide where to take it next.

The idea of the autopilot robot was simple: it is a mobile robot augmented with computer vision. Still, it is a fun project for anyone wishing to learn and combine robotics and machine learning techniques in one project. Moreover, our project can be seen as a miniature version of many real-world robots and products, such as robot vacuums (those using cameras), delivery robots, and of course real autopilot cars, so it is also an educational project that familiarizes one with the techniques these robots and products use, or at least gives a taste of the problems they must solve.

Able to follow a route

Able to detect traffic signs

Able to behave accordingly upon traffic signs

What was created

Technical descriptions, illustrations

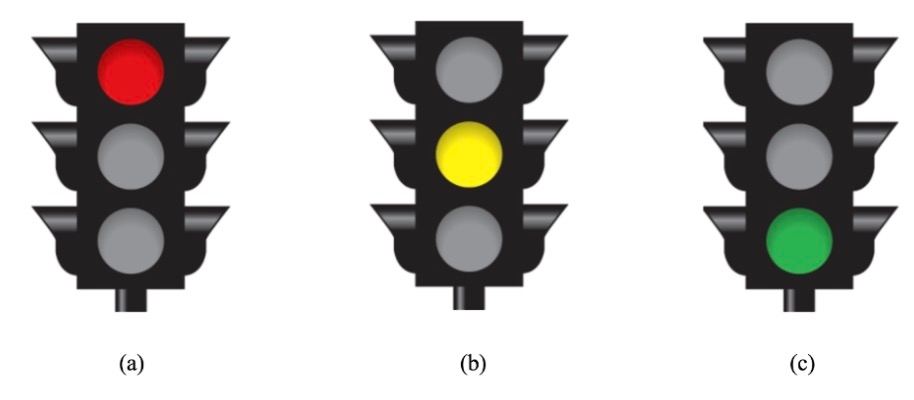

There are five types and two groups of traffic signs that our robot can detect and react to. Here are descriptions of each of them and the rules associated with them upon the robot’s detection.

Traffic light (See Fig1)

Red light: stops movement and restarts in two seconds.

Orange light: slows down

Green light: speeds up

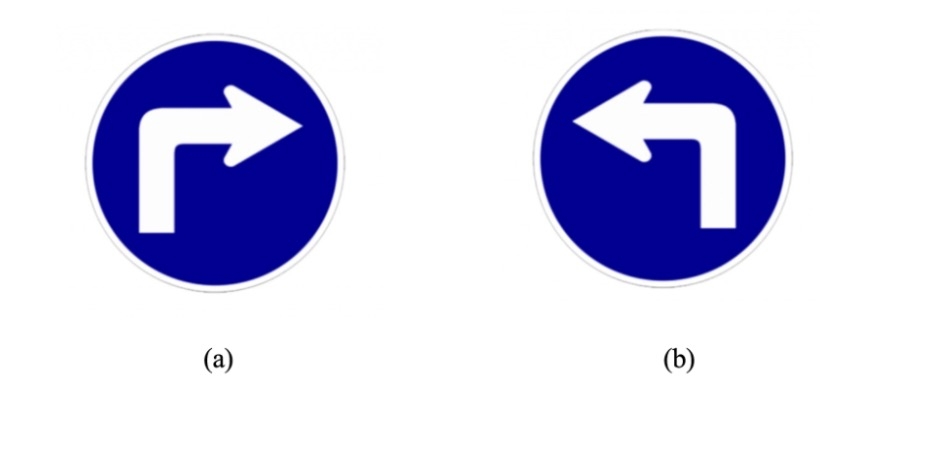

Turn signs (See Fig2)

Left turn: turn left and merge into the lane

Right turn: turn right and merge into the lane

All turns are 90 degrees. The robot must go straight and stay in the lane if not signaled to turn.
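The reaction rules above amount to a small lookup from detected sign to driving behavior. The sketch below encodes them in Python. The two-second red-light pause and the 90-degree turns come from the rules; the base speed and the slow-down/speed-up factors are hypothetical values, since the text only says "slows down" and "speeds up".

```python
import math
from dataclasses import dataclass


@dataclass
class Action:
    speed: float           # forward speed to use next (m/s)
    pause_s: float = 0.0   # how long to stop before resuming at `speed`
    turn_rad: float = 0.0  # heading change to perform (+ = left)


BASE_SPEED = 0.15  # hypothetical cruising speed


def react(sign, current_speed=BASE_SPEED):
    """Map a detected sign label to the rule-defined behavior."""
    if sign == "red":
        return Action(speed=current_speed, pause_s=2.0)  # stop, restart after 2 s
    if sign == "orange":
        return Action(speed=current_speed * 0.5)         # hypothetical slow-down factor
    if sign == "green":
        return Action(speed=current_speed * 1.5)         # hypothetical speed-up factor
    if sign == "left":
        return Action(speed=current_speed, turn_rad=math.pi / 2)
    if sign == "right":
        return Action(speed=current_speed, turn_rad=-math.pi / 2)
    return Action(speed=current_speed)  # no sign: go straight and stay in the lane
```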

2. Discussion of interesting algorithms, modules, techniques

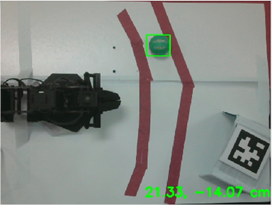

The meat of this project is computer vision. We soon realized that different strategies could be used for the two groups of traffic signs, as they have different distinguishing attributes (color for traffic lights and shape for turn signs). We therefore used contour detection for traffic lights, with size and circularity thresholds to filter out noise. Turn sign classification turned out to be much harder than we initially expected: the predictions were sometimes unstable, stochastic, and confusing, possibly because of subtle differences between the training data we found online and the test data (what the robot sees in the lab).
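A common way to express a circularity threshold like the one mentioned above is the measure 4πA/P², which equals 1 for a perfect circle and drops toward 0 for elongated shapes such as lane lines. The sketch below applies it to (area, perimeter) pairs of the kind `cv2.contourArea(contour)` and `cv2.arcLength(contour, True)` return. The threshold values are hypothetical and would need tuning on the real robot.

```python
import math


def circularity(area, perimeter):
    """4*pi*A / P**2: 1.0 for a circle, smaller for elongated or jagged shapes."""
    if perimeter <= 0:
        return 0.0
    return 4.0 * math.pi * area / (perimeter ** 2)


def is_traffic_light(area, perimeter, min_area=300.0, min_circularity=0.75):
    """Keep blobs that are both large enough and round enough (hypothetical thresholds)."""
    return area >= min_area and circularity(area, perimeter) >= min_circularity


r = 15.0
print(is_traffic_light(math.pi * r * r, 2 * math.pi * r))  # True: round blob
print(is_traffic_light(20.0 * 400.0, 2 * (20.0 + 400.0)))  # False: long thin lane segment
```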

We tried SVM, SIFT, and CNN approaches, and we even found a web API for traffic sign models that we integrated into our project, but none of them worked well enough.

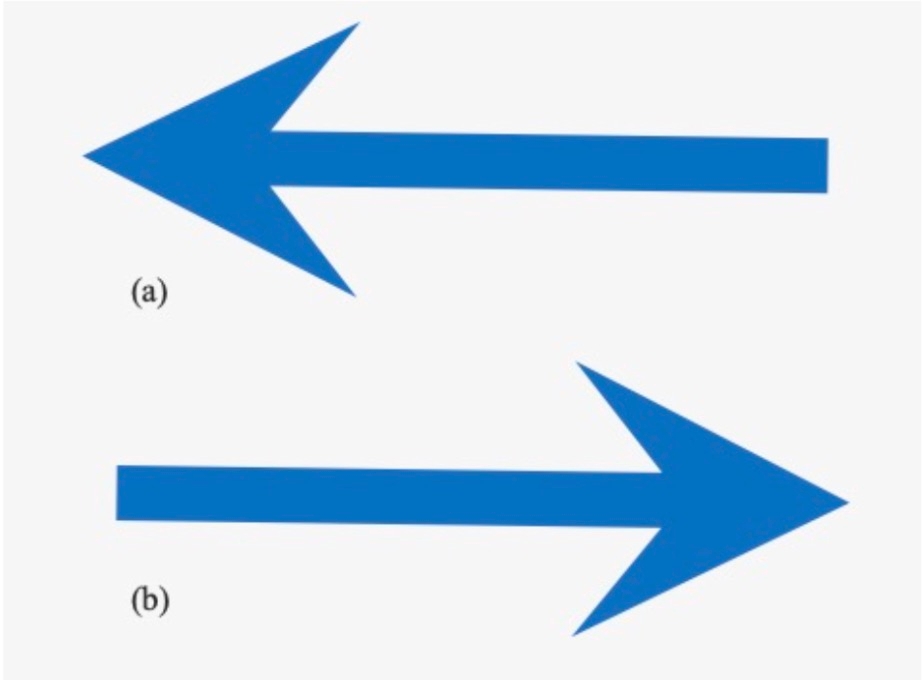

We therefore pivoted to a more straightforward approach: we replaced our original realistic traffic signs (Fig2a) with simple arrows (Fig2b), which allowed us to:

Detect the blue contour and crop the image from the robot's camera to a smaller size

Extract the edges and vertices of the arrow, and find its tip and centroid

Classify the arrow’s direction based on the relative position of the tip to the centroid
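The three steps above can be sketched as follows, starting from the arrow's outline as a list of polygon vertices (for example, the output of `cv2.approxPolyDP` on the cropped blue region). Two details are assumptions rather than documented choices: the centroid is the polygon's area centroid from the shoelace formula, and the tip is taken to be the vertex farthest from that centroid, which holds for arrows with a pronounced head like the simplified signs.

```python
import math


def polygon_centroid(pts):
    """Area centroid of a simple polygon via the shoelace formula."""
    a = cx = cy = 0.0
    n = len(pts)
    for i in range(n):
        x0, y0 = pts[i]
        x1, y1 = pts[(i + 1) % n]
        cross = x0 * y1 - x1 * y0
        a += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    a *= 0.5
    # Dividing by the signed area makes the result independent of vertex order.
    return cx / (6.0 * a), cy / (6.0 * a)


def arrow_direction(pts):
    """Classify a horizontal arrow as 'left' or 'right' from tip vs centroid."""
    cx, cy = polygon_centroid(pts)
    tip = max(pts, key=lambda p: math.hypot(p[0] - cx, p[1] - cy))
    return "right" if tip[0] > cx else "left"


# Shaft from x=0..4, head from x=4..10 with its tip at (10, 3).
right_arrow = [(0, 2), (4, 2), (4, 0), (10, 3), (4, 6), (4, 4), (0, 4)]
left_arrow = [(10 - x, y) for x, y in right_arrow]
print(arrow_direction(right_arrow), arrow_direction(left_arrow))  # right left
```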

Two more things worth mentioning on lane following:

Our robot follows a route bounded by two lines (one green and one red) instead of a single line. Normally the robot uses contour detection to see the two lines and then computes their midpoint. The problem comes when the robot turns: in this case, it can only see one colored line. Our technique is to follow only that line and add an offset so that the robot steers back to the middle of the route (the direction of the offset depends on the color of the line).

Turning is tricky because line following constantly looks for the midpoint of a route. To achieve it, we published three topics named “/detect/lane/right_midpoint”, “/detect/lane/midpoint”, and “/detect/lane/left_midpoint”. When there are multiple routes (at an intersection), the robot chooses which midpoint to follow based on the presence of a turn sign. By default, the robot moves according to the “/detect/lane/midpoint” topic.
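Both lane-following techniques above can be sketched in a few lines of Python. The three topic names come from the text; everything else is an assumption for illustration: which color marks which edge of the lane, the pixel offset and steering gain, and the idea of latching a detected turn sign until the turn is complete (`TurnSelector` is a hypothetical name, not one of the team's nodes).

```python
# Hypothetical convention: green marks the left edge of the lane, red the right.
MIDPOINT_TOPICS = {
    "left": "/detect/lane/left_midpoint",
    "right": "/detect/lane/right_midpoint",
}
DEFAULT_TOPIC = "/detect/lane/midpoint"


def lane_target_x(green_x, red_x, offset_px=160):
    """Column to steer toward, given each line's centroid column or None."""
    if green_x is not None and red_x is not None:
        return (green_x + red_x) / 2.0   # both lines visible: use the midpoint
    if green_x is not None:
        return green_x + offset_px       # only the left (green) line: aim right of it
    if red_x is not None:
        return red_x - offset_px         # only the right (red) line: aim left of it
    return None                          # no line visible


def steering(target_x, image_width=640, gain=0.005):
    """Proportional angular velocity; positive values turn left (ROS convention)."""
    if target_x is None:
        return 0.0
    return -gain * (target_x - image_width / 2.0)


class TurnSelector:
    """Remember the last turn sign until the turn through the intersection is done."""

    def __init__(self):
        self.pending = None

    def on_sign(self, label):
        if label in MIDPOINT_TOPICS:
            self.pending = label

    def on_turn_complete(self):
        self.pending = None

    def topic(self):
        return MIDPOINT_TOPICS.get(self.pending, DEFAULT_TOPIC)
```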

Table 1. Nodes and Messages description

Story of the project

How it unfolded, how the team worked together

At the very beginning of our project, we set these three milestones for our work:

We largely followed this plan, but as one can tell, the workload was not evenly distributed across the phases.

Milestone 1 is something we had already done in an assignment, but here we use two lines to define a route instead of one, which adds the complexity explained above. Traffic light classification was fairly straightforward in hindsight: we simply used color masking and contour detection. To keep the robot from confusing the red and green lines that define the route with the red and green traffic lights, we set up circularity and area thresholds. We spent the most time on milestone 3. We experimented with various methods and kept failing until we decided to simplify our traffic signs; more details are in section 8. Our team worked together smoothly and communicated effectively and frequently. As there are three of us, we divided the work into lane detection and following, traffic light classification, and sign classification, and each of us worked on one part to maximize productivity. After each piece of functionality was initially built, we worked closely to integrate the pieces and debug them together.

Problems that were solved, pivots that had to be taken

The first problem, or headache, we encountered is that we use many colors for object detection in this project, so we had to spend a great deal of time tuning the HSV values of our colors of interest, as slight changes in background lighting or color throw them off.

The more challenging part, as alluded to above, is that turn sign classification was more difficult than we initially expected. We tried various model architectures, including CNN, SVM, SIFT, and linear layers. Whichever model we chose, the difficulty came from the inherent instability of machine learning models. To illustrate: we found a model online that had been trained on 10,000 traffic sign images for 300 epochs and reached a very low loss. It could classify various traffic signs (stop sign, rotary, speed limit, you name it), yet it sometimes failed on left turn and right turn classification even when the image was clear and the background had been removed, and we did not find a good way to remedy this. So we pivoted away from machine learning models and replaced the curved traffic signs (Fig 2a) with horizontal arrows (Fig 2b). The details of the algorithm are discussed in section 4.

Your own assessment

We think working on this project has been a fun and fruitful experience. This semester, we all gained a better appreciation of the many quirky problems in robotics: we had to re-pick essentially all of the parameters when we migrated from the Gazebo world to the real lab, and we literally had to re-tune the HSV values every time we switched to a new robot because of minute differences in background lighting and in each robot's camera. If there is one thing we learned from this class, it is that real robots don't drive straight. Fortunately, we learned much more than this single lesson, and we were able to use our problem-solving and engineering skills to find workarounds for all the quirky problems we encountered. In summary, we learned something from every failure and frustration, and having been through all of it, we are very proud of what we achieved this semester.

Benjamin Blinder benjaminblinder@brandeis.edu

Ken Kirio kenkirio@brandeis.edu

Vibhu Singh vibhusingh@brandeis.edu

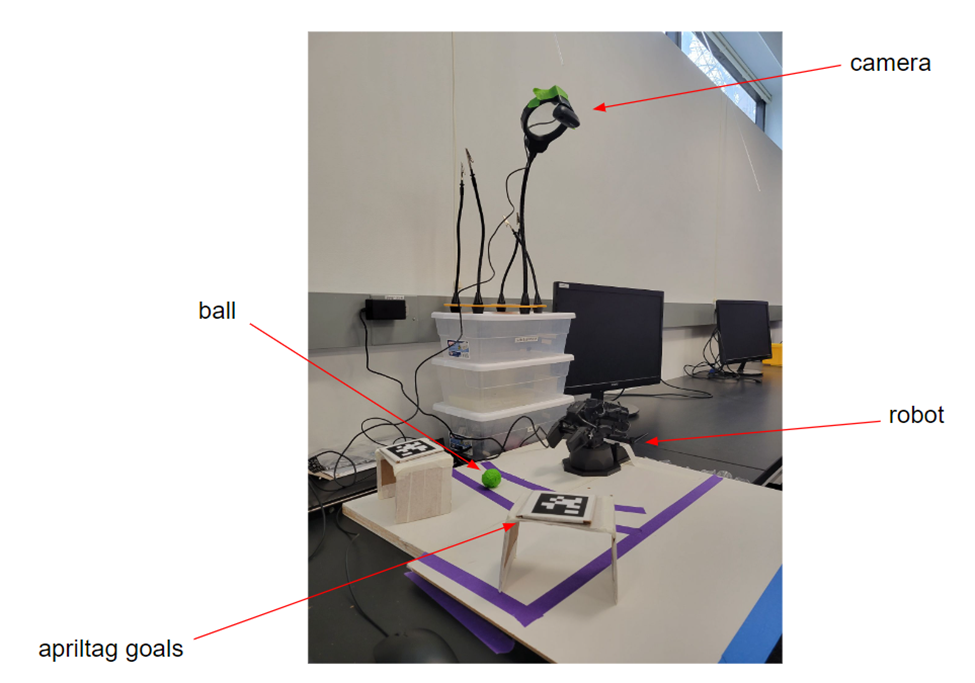

The project coordinates a stationary arm robot and a mobile transportation robot to load and unload cargo autonomously. We imagine a similar algorithm being used for loading trucks at ports or in factory assembly lines.

There are three phases: mapping, localization, and delivery.

First, the robot drives around and builds a map. Once the map is made, a person manually drives the robot to the delivery destination and presses a button to mark that location. The person then manually drives the robot back to the loading point and marks that location as well. With this setup, the robot can start working as a delivery robot, transporting cubes from the loading zone to the arm.

In the delivery phase, a person loads a piece of cargo (we used a 3D-printed cube) onto the robot and then presses a button to tell the robot that it has a delivery to make. Once the robot arrives at the arm, computer vision is used to detect the cargo and calculate its position relative to the arm. When the arm receives the coordinates, it determines which command would be needed to reach that position and whether that command is physically possible. If the coordinates are not valid, the transportation robot attempts to reposition itself so that it is close enough for the arm. If they are valid, the arm grabs the cube off the robot's loading plate and places it in a specified location. Finally, the transportation robot returns to the initial home zone, allowing the process to repeat.

Mapping

Create a map

Localize on a premade map

A flowchart of message passing that must occur between the three main components of this project.

We needed the robot to move autonomously and orient itself to the arm, so we decided to use SLAM to create a map and the AMCL and move_base packages to navigate. Using SLAM, we use the robot's LiDAR sensor to map the room, making sure to scan clear and consistent lines around the arm and the delivery zone. We then save the map and use AMCL to localize the robot on it; AMCL maintains a point cloud of poses where the robot could be, given the current LiDAR scan. Next, we manually drive the robot through the map so that this estimate converges and navigation is accurate. We then capture the robot's position and orientation at a home location and a delivery location and save them for future navigation. Finally, we can tell the robot to navigate autonomously between the two locations using the move_base package, which plans a safe path between them and avoids any obstacles in the way.

Cargo is detected via color filtering. We filter the image with a convolutional kernel to reduce noise, then apply the color mask using bitwise operators. The centroid of the detected cargo is converted from pixels to physical units using the measured physical dimensions of the area the image captures.
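The centroid and pixel-to-physical steps above can be sketched in plain Python. The conversion is a per-axis scale factor using the launch-file parameters described in the setup instructions (`width_pixels`, `height_pixels`, `width_phys`, `height_phys`). The nested-list mask, measuring from the image's top-left corner, and the default physical dimensions are assumptions for illustration; in practice the mask would come from OpenCV.

```python
def mask_centroid(mask):
    """Centroid (column, row) of nonzero pixels in a 2-D mask given as nested lists."""
    total = sx = sy = 0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                total += 1
                sx += x
                sy += y
    if total == 0:
        return None  # no cargo detected
    return sx / total, sy / total


def pixel_to_physical(px, py, width_pixels=640, height_pixels=480,
                      width_phys=0.32, height_phys=0.24):
    """Scale a pixel position to physical units at the cargo's height.

    Valid only because the camera is fixed and parallel to the ground and the
    cargo always sits at the same height on the transportation robot.
    The default physical dimensions (meters) are hypothetical.
    """
    return px * width_phys / width_pixels, py * height_phys / height_pixels


mask = [[0, 0, 0],
        [0, 1, 1],
        [0, 1, 1]]
print(mask_centroid(mask))           # (1.5, 1.5)
print(pixel_to_physical(320, 240))   # roughly (0.16, 0.12)
```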

Since commands to the arm are sent in polar coordinates, the Cartesian coordinates obtained from the image are converted into polar coordinates. These must then be transformed into the units used by the arm, which have no physical basis. We took measurements with the cargo in various positions and found that a linear equation could model this transformation, and used this equation to determine the y-coordinate. However, the x-coordinate could not be modeled by a linear equation, because the arm's limited number of possible positions along this axis made it impossible to establish a strong relationship between the coordinates. In addition, whether the cargo was parallel or at an angle to the arm affected the ideal point to grab it. Instead, we calculated the x-position by assigning the cargo's position and orientation to states, each associated with a particular x-coordinate.
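Two pieces of the transformation above can be sketched in plain Python: converting the cargo's Cartesian offset from the arm's base into polar form with `math.hypot` and `math.atan2`, and fitting the linear model that maps the angle to a y-command by ordinary least squares over calibration measurements. Pairing the angle with the y-command follows the later note that the arm's x- and y-coordinates correspond to its radius and angle. The calibration numbers below are made-up placeholders, not the team's measurements.

```python
import math


def to_polar(x, y):
    """Cartesian offset from the arm's base -> (radius, angle in degrees)."""
    return math.hypot(x, y), math.degrees(math.atan2(y, x))


def fit_line(xs, ys):
    """Ordinary least-squares fit y = m*x + b; returns (m, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    m = sxy / sxx
    return m, mean_y - m * mean_x


# Placeholder calibration: measured angle (deg) -> y-command that reached it.
angles = [-30.0, -10.0, 0.0, 15.0, 30.0]
commands = [-60.0, -20.0, 0.0, 30.0, 60.0]
m, b = fit_line(angles, commands)

# Cargo 0.10 m ahead and 0.10 m to the side of the arm's base.
r, theta = to_polar(0.10, 0.10)
y_command = m * theta + b
print(round(r, 4), round(theta, 1), round(y_command, 1))
```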

Arm robot

The arm robot needs to be elevated so that it can pick up the cube. Ensure that the setup is stable even when the arm changes positions, using tape as necessary. Determine the z-command required to send the arm from the "home" position (see ) to the height of the cargo when it is loaded on the transportation robot. This will be one parameter of the launch file.

Camera

The camera should be set in a fixed position above the loading zone, where the lens is parallel to the ground. The lab has a tripod for such purposes. Record the resolution of the image (default 640x480) and the physical distance that the camera captures at the height of the cargo when placed on the transportation robot. These will also be parameters for the launch file.

Run roslaunch cargoclaw transport_command.launch. Separately, run roslaunch cargoclaw arm_command.launch with the following parameters:

arm_z: z-command from the arm's position to pick up the cargo from the transportation robot (Setup #1)

width_pixels/height_pixels: Image resolution (Setup #2)

width_phys/height_phys: Physical dimensions captured in the image at the height of the cargo (Setup #2)

Mode 1: Mapping. Use teleop to drive the robot between the loading and unloading zones. Watch rviz to ensure adequate data is being collected.

Mode 2: Localizing. Use teleop to drive the robot between the loading and unloading zones. Watch rviz to ensure localization is accurate. Press the "Set Home" button when the robot is at the location where cargo will be loaded. Press the "Set Goal" button when the robot is at the location of the arm.

Mode 3: Running. When the cargo is loaded, press "Go Goal". This will send the robot to the loading zone, where the loading/unloading will be done autonomously. The robot will then return to the home position for you to add more cargo. Press "Go Goal" and repeat the cycle. The "Go Home" button can be used if the navigation is not working, but should not be necessary.

Nodes

Messages

We began by trying to send commands to the robotic arm through ROS. While the arm natively runs proprietary software, Veronika Belkina created a package that allows ROS commands to control the arm, which we decided to use. The arm is controlled via USB, so we first had to set up a new computer with Ubuntu and install ROS. Our first attempt failed due to an unresolved driver error, but our second attempt worked. This step took a surprising amount of time, but we learned a lot about operating systems while troubleshooting it.

Simultaneously, we researched the arm capabilities and found that the arm can pick up a maximum weight of 30g. This required that we create our own 3D printed cubes to ensure that the cargo would be light enough. All cubes were printed in the same bright yellow color so that we could use color filtering to detect the cube.

Next, we tested our code and the cubes by coding the arm to pick up a piece of cargo from a predetermined location. This step was difficult because commands for the x/z-coordinates are relative, while commands for the y-coordinate are absolute. After this test worked, we made the node more flexible, able to pick up the cargo from any location.
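Because the x/z commands are relative while y is absolute, code that wants to send the arm to an absolute target has to remember where the arm currently is. The sketch below is a hypothetical bookkeeping helper, not part of Veronika Belkina's package: it converts absolute targets into relative x/z steps and passes y through unchanged, assuming the arm starts from a known home position at (0, 0) in command units.

```python
class ArmCommandTracker:
    """Convert absolute arm targets into mixed relative/absolute commands.

    Hypothetical helper: x and z commands are deltas and y is absolute, so the
    current x/z are tracked here, starting from an assumed home of (0, 0).
    """

    def __init__(self, home_x=0, home_z=0):
        self.x = home_x
        self.z = home_z

    def command_for(self, target_x, target_y, target_z):
        """Return (dx, y, dz) to send, and record the new x/z position."""
        dx = target_x - self.x
        dz = target_z - self.z
        self.x, self.z = target_x, target_z
        return dx, target_y, dz

    def reset_home(self, home_x=0, home_z=0):
        """Call after the arm is sent back to its home position."""
        self.x, self.z = home_x, home_z


tracker = ArmCommandTracker()
print(tracker.command_for(100, 50, -30))  # (100, 50, -30)
print(tracker.command_for(120, 10, -30))  # (20, 10, 0)
```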

With the arm working, we then began working on the image processing to detect the cargo and calculate its location. First we had to set up and calibrate the USB camera using the usb_cam package. Then we began working on cargo detection. Our first attempt used color filtering, but ran into issues due to the difference in data between the USB camera and the robots' cameras. We then tried applying a fiducial to the top of the transportation robot and using the aruco_detect package. However, the camera was unable to reliably detect the fiducial, leading us to return to the color detection method. With some additional image transformation and noise reduction processing, the color detection was able to reliably identify the cargo and report its centroid. Then, since the height of the transportation robot is fixed we were able to calculate the physical location of the cargo based on the ratio of pixels to physical distance at that distance from the camera. Using this information, we created another node to determine whether the cube is within the arm's range to pick up. We found converting the physical coordinates to the commands utilized by the arm to be extremely difficult, ultimately deciding to take many data points and run a regression to find a correlation. With this method we were relatively successful with the y-coordinate, which controlled the arm's rotation.

Simultaneously, we coded the transportation robot's autonomous navigation. We realized our initial plan to combine lidar and fiducial data to improve localization would not work, as move_base needs an occupancy map from which to build the cost map it plans trajectories on, while fiducial_slam saved the coordinates of each detected fiducial but did not produce such a map. We decided to move forward with slam_gmapping, which uses lidar data and does produce a map that move_base can use. This allowed us to use move_base to reach the approximate location of the arm and fine-tune the robot's position using fiducial detection as necessary. However, upon testing, we realized move_base navigation was already very accurate, and trading control between move_base and a fiducial-following algorithm resulted in unexpected behavior, so we decided to use move_base alone.

Finally, we attempted to combine all of these components. Although move_base allowed for good navigation, we found that even slight differences in the robot's orientation in the delivery zone changed the coordinates needed to grab the cargo. We made the image processing component more robust by pivoting to polar rather than Cartesian coordinates when calculating the commands to send to the arm, as the arm's x- and y-coordinates correspond to its radius and angle. We then used different approaches for transforming the x- and y-coordinates into commands. The arm had a very limited range of motion along its x-axis, making it practical to measure the cargo's location at each position and pick the x-command that most closely corresponded to the cargo's location. However, the arm had a much greater range along its y-axis, so we took measurements and performed a cubic regression to get an equation for the command at a given y-coordinate. We also added further tuning after this step, as an angled piece of cargo requires the arm to move increasingly far along its y-axis before its gripper can pick it up.
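Picking the x-command that most closely corresponds to the cargo's location is a nearest-neighbor lookup over the measured positions. (The cubic fit for y could be done with NumPy's `numpy.polyfit(xs, ys, 3)`.) The sketch below shows only the lookup, in plain Python. The table of x-commands and the radii they reach is hypothetical, and returning `None` when nothing is close enough is one way to signal that the transportation robot should reposition itself.

```python
# Hypothetical calibration table: x-command -> radius (m) reached by the gripper.
X_COMMAND_RADII = {0: 0.12, 1: 0.15, 2: 0.18, 3: 0.21}


def nearest_x_command(radius, table=X_COMMAND_RADII, max_error=0.02):
    """Return the x-command whose measured radius is closest to `radius`.

    Returns None when even the best match is farther than `max_error`,
    meaning the cargo is out of reach and the robot should reposition.
    """
    best = min(table, key=lambda cmd: abs(table[cmd] - radius))
    if abs(table[best] - radius) > max_error:
        return None
    return best


print(nearest_x_command(0.16))  # 1
print(nearest_x_command(0.30))  # None
```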

Working as a team was very beneficial for this project. Although we all planned the structure of the project and overcame obstacles as a team, each person was able to specialize in a different area of the project. We had one person work on the arm, another on image processing, and another on the transportation robot.

Team Members: Michael Jiang (michaeljiang@brandeis.edu) & Matthew Merovitz (mmerovitz@brandeis.edu)

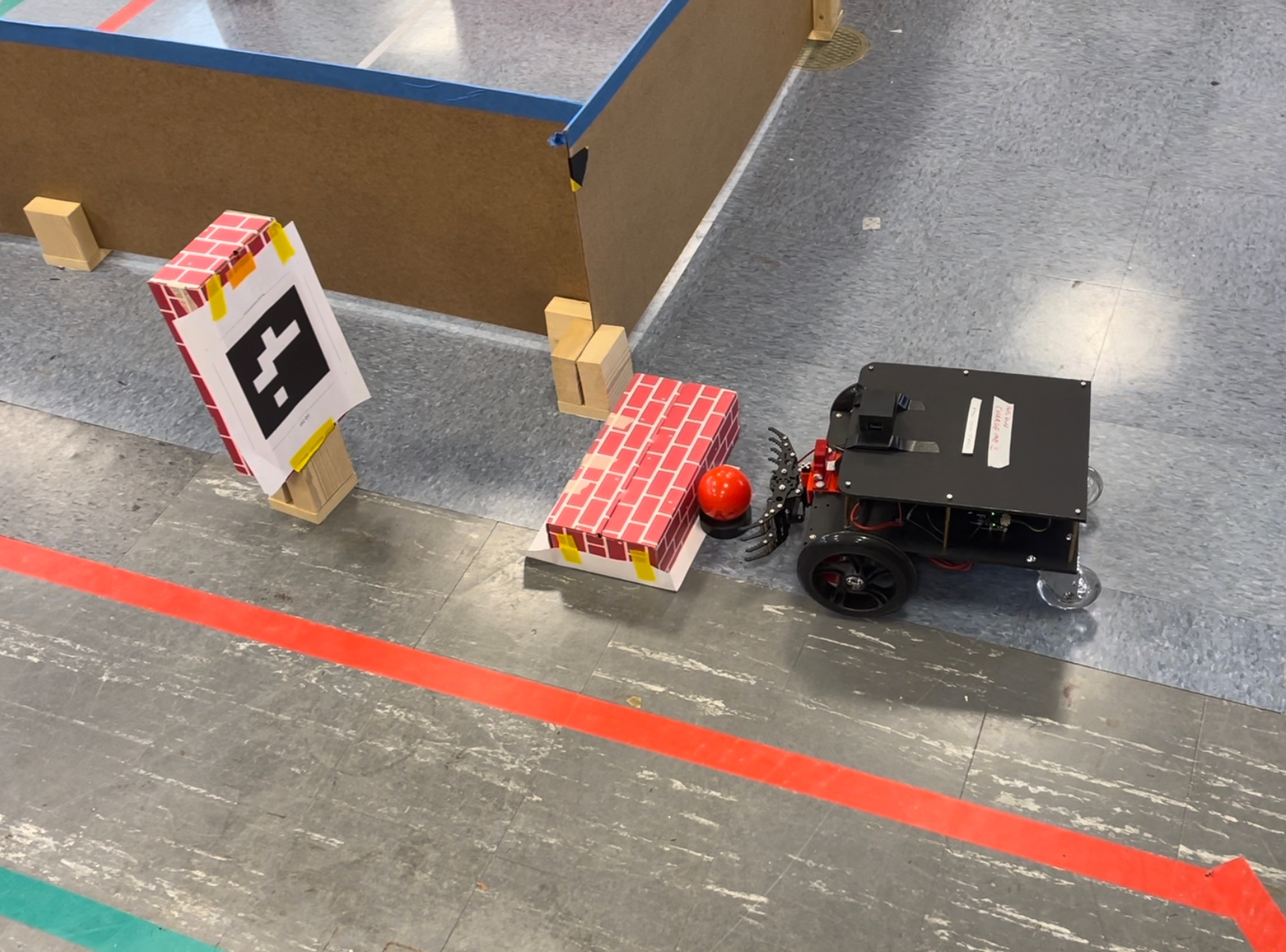

The project aimed to create a robot that could mimic the game of bowling by grabbing and rolling a ball towards a set of pins. The robot was built using a Platform bot with a pincer attachment. The ball is a lacrosse ball and the “pins” are either empty coffee cups or empty soda cans.

At first, we imagined that in order to achieve this task, we would need to create an alley out of blocks, map the space, and use RGB for ball detection. None of this ended up being how we ultimately decided to implement the project. Instead, we opted to use fiducial detection along with line following to implement ball pickup and bowling motion. The fiducial helps a lot with consistency and the line following allows the robot to be on track.

The primary objective of the BowlingBot project was to develop a robot that could perform the task of bowling, including locating the ball, grabbing it, traveling to the bowling alley, and knocking down the pins with the ball. The project aimed to use a claw attachment on the Platform bot to grab and roll the ball towards the pins. The robot was designed to use fiducial markers for ball detection and orientation, and line detection for pin alignment.

The BowlingBot project utilized several algorithms to achieve its objectives. These included:

Fiducial Detection: The robot was designed to use fiducial markers to detect the location and orientation of the ball. The fiducial markers used were ArUco markers, which are widely used in robotics for marker-based detection and localization. In addition, we used odometry to ensure precision, allowing us to consistently pick up the ball.

Line Detection: The robot utilized line detection techniques to align itself with the pins. This involved using a camera mounted on the robot to detect the yellow line on the bowling alley and align itself accordingly. This also uses OpenCV.

Pincer Attachment: The BowlingBot utilized a pincer attachment to pick up the ball and roll it towards the pins.

The code is centered around a logic node that controls the logic of the entire bowling game. It relies on classes in fiducialbowl.py, pincer.py, bowlingmotion.py.

fiducialbowl.py: This class has two modes. Travel mode is for traveling to the ball to pick it up and then traveling back to the bowling alley. Bowling mode centers the robot along the yellow line.

pincer.py: Controls the opening and closing of the pincer attachment on the Platform bot.

bowlingmotion.py: This node turns until the yellow line is seen, centers the robot along the line, and then performs the quick motion of moving forward and releasing the ball.
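The centering step described for bowlingmotion.py (turn until the yellow line is seen, then center on it before the fast forward motion) can be sketched as a proportional controller on the line's column in the image. The gain, tolerance, and search speed below are hypothetical, and in practice the line's column would come from an OpenCV yellow mask.

```python
def centering_command(line_x, image_width=640, gain=0.004,
                      search_speed=0.3, tolerance_px=8):
    """Return (angular_z, aligned) for one control step.

    line_x: column of the yellow line's centroid, or None if it is not visible.
    Positive angular_z turns left (ROS convention).
    """
    if line_x is None:
        return search_speed, False       # keep turning until the line appears
    error = line_x - image_width / 2.0
    if abs(error) <= tolerance_px:
        return 0.0, True                 # centered: safe to start the bowl
    return -gain * error, False          # turn toward the line


print(centering_command(None))  # (0.3, False)
print(centering_command(324))   # (0.0, True)
```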

During development, we encountered several obstacles and challenges that we had to overcome or adjust for. Initially, crowded lab hours slowed down our camera and sensors, with the delay between the camera feed and real time peaking at about a minute. We collectively decided to come in during office hours instead, when the lab was less crowded, to avoid the heavy network traffic, which allowed us to test the camera properly.

Another roadblock was finding an appropriate ball, which held up our testing of the bowling motion. We knew we needed a ball that was heavy enough to knock over pins, but small enough to fit inside the pincer. We initially bought a lacrosse ball to test with, but it was too grippy and ended up catching on the ground and rolling under the robot, forcing us to pivot to using a field hockey ball, which was the same size and weight but crucially was free from the grippiness of the lacrosse ball.

Another challenge we had to adapt to was the bowling motion itself. We initially proposed that the robot apply concepts from the line follower so that it could adjust its course while rolling down the alley. However, we discovered that the bowling motion was too quick for the robot to reliably adjust itself in such a short time. To resolve this, we pivoted away from in-motion adjustment and instead had the robot align itself as precisely as possible before moving forward at all.

The BowlingBot project was inspired by the team's love for robotics and bowling. The team was intrigued by the idea of creating a robot that could mimic the game of bowling and we spent lots of time researching and developing the project.

The project was challenging and required us to utilize several complex algorithms and techniques to achieve our objectives. We faced several obstacles, including issues with ball detection and alignment, and had to continuously iterate and refine our design to overcome these challenges.

Despite the challenges, the team was able to successfully develop a functional BowlingBot that could perform the task of bowling. We are excited to showcase our project to our peers and hold a demonstration where participants can compete against the robot.

Overall, the BowlingBot project was a success, and the team learned valuable lessons about robotics, problem-solving, and combining several class concepts into a more complex final product.

For our team's COSI119a Autonomous Robotics Term Project, we wanted to tackle a challenge that was neither too easy nor too hard. All of our members had only recently learned ROS, so creating a fully demo-able project in 8 weeks sounded impossible. We started with a few ideas: a walking robot that follows its owner, a robot that plays tag, and a pet robot that accompanies someone. Those ideas ran into many blockers: the limits of what we knew, the available equipment, time constraints, and our mentor's concern that they would not be feasible. We had to address issues like:

Names: Isaac Goldings (isaacgoldings@brandeis.edu), Jeremy Huey (jhuey@brandeis.edu), David Pollack (davidpollack@brandeis.edu)

Instructor: Pito Salas (rpsalas@brandeis.edu)

Date: 05/03/2023

```python
#!/usr/bin/env python
'''
A module with a GPS node.

GPS2IP: http://www.capsicumdreams.com/gps2ip/
'''

import json
import re
import socket

import rospy
from std_msgs.msg import String


class GPS:
    '''A node which listens to GPS2IP Lite through a socket and publishes a GPS topic.'''

    def __init__(self):
        '''Initialize the publisher and instance variables.'''
        # Instance Variables
        self.HOST = rospy.get_param('~HOST', '172.20.38.175')
        self.PORT = rospy.get_param('~PORT', 11123)

        # Publisher
        self.publisher = rospy.Publisher('/gps', String, queue_size=1)

    def ddm_to_dd(self, degrees_minutes):
        '''Convert NMEA degrees and decimal minutes (e.g. 4222.5) to decimal degrees.'''
        degrees, minutes = divmod(degrees_minutes, 100)
        decimal_degrees = degrees + minutes / 60
        return decimal_degrees

    def get_coords(self):
        '''A method to receive the GPS coordinates from GPS2IP Lite.'''
        # Instantiate a client object and receive one NMEA sentence
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as s:
            s.connect((self.HOST, self.PORT))
            gps_data = s.recv(1024)

        # Transform the first five comma-separated fields into a dictionary
        gps_keys = ['message_id', 'latitude', 'ns_indicator', 'longitude', 'ew_indicator']
        gps_values = re.split(r',|\*', gps_data.decode())[:5]
        gps_dict = dict(zip(gps_keys, gps_values))

        # Cleanse the coordinate data
        for key, indicator, negative in [('latitude', 'ns_indicator', 'S'),
                                         ('longitude', 'ew_indicator', 'W')]:
            # Transform the longitude and latitude into decimal degrees
            gps_dict[key] = self.ddm_to_dd(float(gps_dict[key]))
            # NMEA carries the sign in the hemisphere field: south and west are negative
            # (a leading zero only pads the degrees, it does not mean negative)
            if gps_dict[indicator] == negative:
                gps_dict[key] = -gps_dict[key]

        # Publish the decoded GPS data
        self.publisher.publish(json.dumps(gps_dict))


if __name__ == '__main__':
    # Initialize a ROS node named GPS
    rospy.init_node("gps")
    # Initialize a GPS instance with the HOST and PORT
    gps_node = GPS()
    # Continuously publish coordinates until shut down
    while not rospy.is_shutdown():
        gps_node.get_coords()
```

```python
#!/usr/bin/env python
'''
A module for a node that alters the quality of the image.
'''

import re

import cv2
import rospy
from cv_bridge import CvBridge
from sensor_msgs.msg import CompressedImage
from std_msgs.msg import String


class Compressor:
    '''A node that resizes camera images to a resolution set by the web client.'''

    def __init__(self):
        '''Initialize necessary publishers and subscribers.'''
        # Instance Variables
        # Initialize an object that converts OpenCV Images and ROS Image Messages
        self.cv_bridge = CvBridge()

        # Image Resolution
        self.img_res = {
            'height': 1080,
            'width': 1920
        }

        # Publisher - https://answers.ros.org/question/66325/publishing-compressed-images/
        # self.publisher = rospy.Publisher('/camera/rgb/image_res/compressed', CompressedImage, queue_size=1)
        self.publisher = rospy.Publisher('/raspicam_node/image_res/compressed', CompressedImage, queue_size=1)

        # Subscribers
        # self.subscriber_cam = rospy.Subscriber('/camera/rgb/image_raw/compressed', CompressedImage, self.callback_cam, queue_size=1)
        self.subscriber_cam = rospy.Subscriber('/raspicam_node/image/compressed', CompressedImage, self.callback_cam, queue_size=1)
        self.subscriber_set = rospy.Subscriber('/image_configs', String, self.callback_set, queue_size=1)

    def callback_cam(self, msg):
        '''A callback that resizes the image in line with the specified resolution.'''
        # Convert CompressedImage to OpenCV
        img = self.cv_bridge.compressed_imgmsg_to_cv2(msg)
        # Apply New Resolution (cv2.resize takes the size as (width, height))
        img = cv2.resize(img, (self.img_res['width'], self.img_res['height']))
        # Convert OpenCV to CompressedImage
        msg_new = self.cv_bridge.cv2_to_compressed_imgmsg(img)
        # Publish
        self.publisher.publish(msg_new)

    def callback_set(self, msg):
        '''A callback to retrieve the specified resolution ("height,width") from the web client.'''
        img_set = re.split(',', msg.data)
        self.img_res['height'] = int(img_set[0])
        self.img_res['width'] = int(img_set[1])


if __name__ == "__main__":
    rospy.init_node("img_comp")
    comp = Compressor()
    rospy.spin()
```

```python
#!/usr/bin/env python
import subprocess

import rospy
from std_msgs.msg import String


def get_ros_topics():
    """
    Returns a list of active ROS topics using the `rostopic list` command.
    """
    command = "rostopic list"
    process = subprocess.Popen(command.split(), stdout=subprocess.PIPE)
    output, error = process.communicate()
    # splitlines() drops the trailing empty element left by the final newline
    topics = output.decode().splitlines()
    return topics


def rostopic_list_publisher():
    """
    ROS node that publishes the list of active ROS topics as a string message.
    """
    rospy.init_node('rostopic_list_publisher', anonymous=True)
    pub = rospy.Publisher('/rostopic_list', String, queue_size=10)
    rate = rospy.Rate(1)  # 1 Hz
    print("[INFO] Robot.py node has been started!")
    while not rospy.is_shutdown():
        topics = get_ros_topics()
        message = ",".join(topics)
        pub.publish(message)
        rate.sleep()


if __name__ == '__main__':
    try:
        rostopic_list_publisher()
    except rospy.ROSInterruptException:
        pass
```

Perform autonomous navigation between two fixed points

Image processing

Recognize the cargo

Calculate the physical location of the cargo

Robotic arm

Coordinate commands on a robot that does not run ROS natively

| Node | Description |
|---|---|
| `arm_cam` | Accepts an image of the delivery zone from the camera, detects the cargo with color filtering, and calculates and publishes its physical location |
| `box_pickup` | Accepts the coordinates published by `arm_cam` and uses a linear regression to determine the y transform and a set of ranges to determine the x transform |
| `cargo_bot` | Directly controls the motion of the Alien Bot using `cmd_vel` and `move_base` |
| `cargo_gui` | User interface that allows control of the robot and setting of the mapping and navigation points |
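The mapping that `box_pickup` performs can be sketched as follows: a least-squares line maps one image coordinate of the cargo to the arm's y coordinate, and a lookup over pixel ranges picks the arm's x coordinate. This is a minimal sketch, not the project's code. The calibration points, the range table, the centimeter units, the names `fit_line` and `pixel_to_arm`, and the choice of which image axis feeds which arm axis are all hypothetical; real values would come from measuring the arm's workspace.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of ys = slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Hypothetical calibration: pixel column of the cargo vs. measured arm y (cm).
CAL_PIXEL_COLS = [100, 200, 300, 400, 500]
CAL_ARM_Y = [-8.0, -4.0, 0.0, 4.0, 8.0]

# Hypothetical range table: band of pixel rows -> arm x (cm).
# Rows outside every band are treated as unreachable by the arm.
X_RANGES = [
    ((0, 160), 20.0),
    ((160, 320), 15.0),
    ((320, 480), 10.0),
]

SLOPE, INTERCEPT = fit_line(CAL_PIXEL_COLS, CAL_ARM_Y)


def pixel_to_arm(col, row):
    """Map a cargo pixel position to arm (x, y), or None if out of reach."""
    y = SLOPE * col + INTERCEPT
    for (low, high), x in X_RANGES:
        if low <= row < high:
            return x, y
    return None
```

A `None` result corresponds to the "cannot be picked up" case that `arm_status` reports back to the transportation robot. Using discrete ranges for x keeps the arm on a few positions that are known to work, while the regression handles the direction where the mapping is close to linear.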

| Topic | Description |
|---|---|
| `alien_state` | True if the transportation robot has finished navigating to the delivery zone. Published by `cargo_bot` and subscribed to by `arm_cam`. |
| `cargo_point` | The physical position of the cargo. Published by `arm_cam` and subscribed to by `box_pickup`. |
| `UI` | A string relaying the robot's state, position, and time since the last input, published to `cargo_gui` for display. |
| `keys` | A string that directs robot movement. Published from `cargo_gui` to `cargo_bot`. |
| `arm_status` | A string relaying whether the cargo at its current location can be picked up by the arm and, if so, when the arm has finished moving the cube. This informs the transportation robot's movement in the delivery zone. Published by `box_pickup` and subscribed to by `cargo_bot`. |

Run `roslaunch autopilot autopilot.launch`, which starts the four nodes used in our project.

Clear description and tables of source files, nodes, messages, actions: YES

| Topic | Message type | Fields | Custom message | Description |
|---|---|---|---|---|
| /detect/lane/midpoint | MidpointMsg | int32 x, int32 y, int32 mul | YES | Midpoint of a lane |
| /detect/lane/left_midpoint | MidpointMsg | | YES | Midpoint of a left lane (when there's a branch or cross) |
| /detect/lane/right_midpoint | MidpointMsg | | YES | Midpoint of a right lane (when there's a branch or cross) |
| /detect/sign | SignMsg | string direction, float32 width, float32 height | YES | Detection of the |
| /detect/light | LightMsg | string color, float32 size | YES | Detection of a traffic light |
| cmd_vel | Twist | | NO | Publish movement |
| /raspicam_node/image/compressed | CompressedImage | | NO | Receive camera data |
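One common way to produce a lane midpoint like the one carried by `MidpointMsg` is to threshold the camera image into a binary lane mask and take the centroid of the lane pixels. The sketch below does this in plain Python so it runs without OpenCV. The mask format, the function names `lane_midpoint` and `steering_error`, and the use of the centroid are assumptions, and the `mul` field is not modeled because its meaning is not described here. On real images, `cv2.moments` on the mask gives the same centroid (before rounding) as `m10/m00` and `m01/m00`.

```python
def lane_midpoint(mask):
    """Return the (x, y) centroid of nonzero pixels in a 2D mask, or None.

    mask is a list of rows; each row is a list of 0/1 values, where 1 marks
    a pixel classified as lane.
    """
    total = sum_x = sum_y = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                total += 1
                sum_x += x
                sum_y += y
    if total == 0:
        return None  # no lane visible in this frame
    # The message fields are int32, so report whole pixels (floored).
    return sum_x // total, sum_y // total


def steering_error(midpoint_x, image_width):
    """Signed horizontal offset of the midpoint from the image center."""
    return midpoint_x - image_width // 2
```

A lane follower would then turn against the sign of `steering_error`, in the same way as a standard proportional line follower; the left and right midpoint topics would let it pick a branch at a fork or cross.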

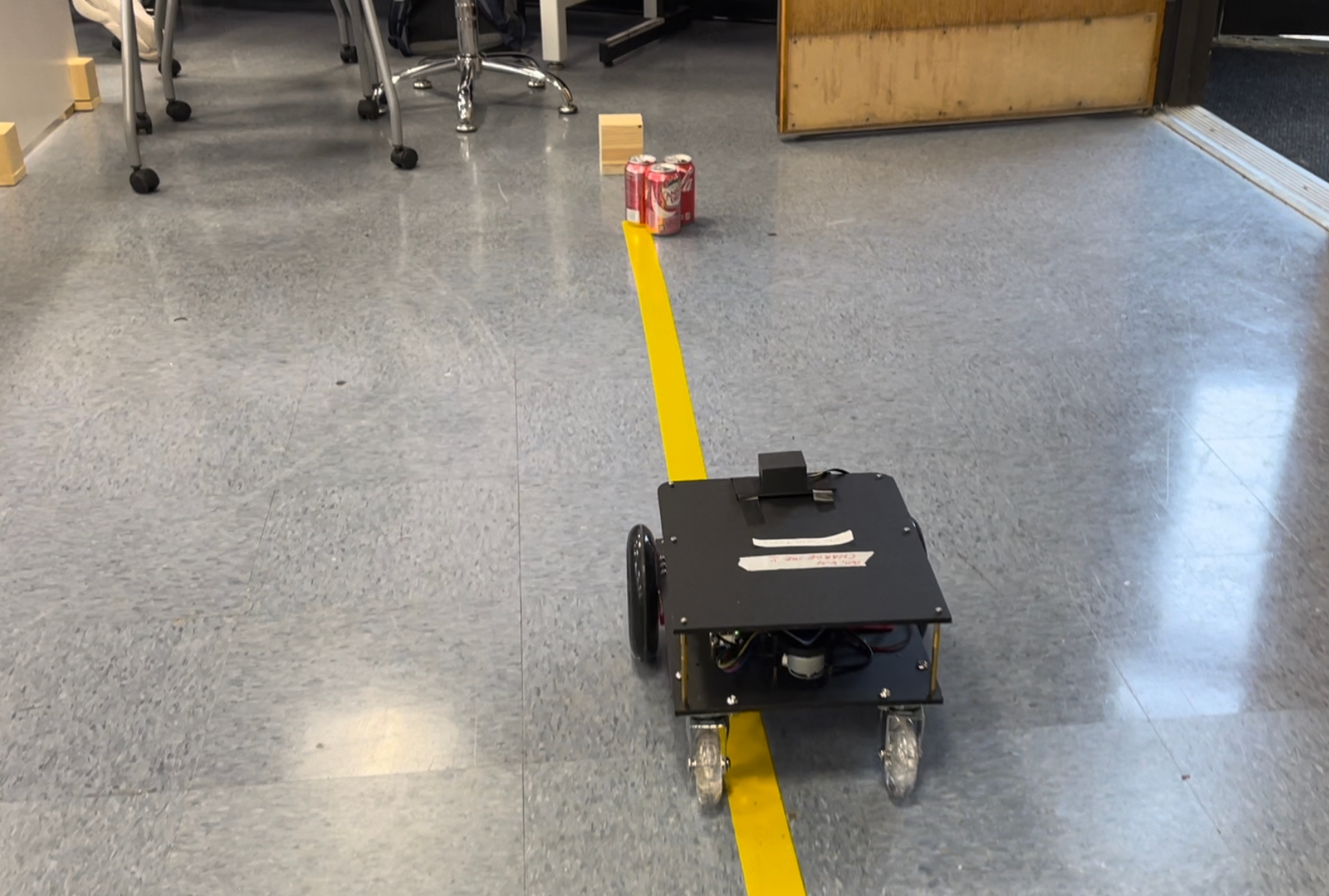

In the end, we decided to work on a robot that guards an object and stops an intruder from entering a specified region. We pivoted from one robot to multiple robots so that they box out the object we are protecting. We tested many design decisions to see which method would give the simplest, least error-prone, and best-performing result.

We learned a lot throughout this project. Even during the last days we were still deploying code and testing, because there is so much more that we want to add.

Video demo: https://drive.google.com/drive/folders/1FiVtw_fQFKpZq-bJSLRGotYXPQ-ROGjI?usp=sharing

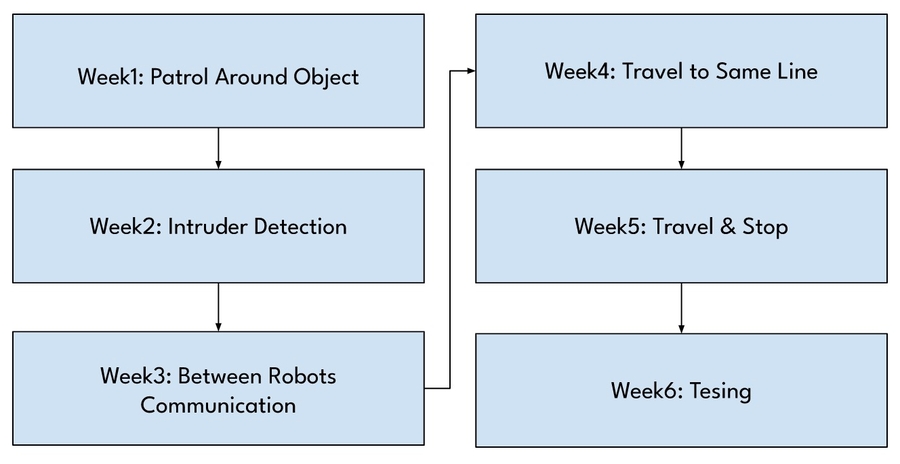

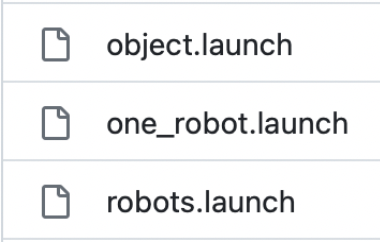

Because we approached this project with an agile scrum methodology, we took the time to develop our user stories so that at each end-of-week sprint review we would know whether we were on track. After reviewing the due dates of the class's other assignments, we decided that setting our own group deadlines on Fridays would let us complete these assignments on time. Below is a brief outline of our weekly goals.

Here is the ⏰ timeline ⏳ we thought we were going to be able to follow:

March 3: Finish the movie script that explains the story of what we want to do, and submit the proposal

March 10: Figure out if tf can be done in Gazebo

March 17: Run multiple real robots

March 24: Detect the object

March 31: Align the robots in a circle- or square-like formation to protect it

April 7: Detect whether something is coming at it

April 14: Make the robots move to protect it from the direction the person is approaching

April 21: Testing

April 28: Testing

May 3: Stop writing code and work on creating a poster

May 10: Continue preparing for the presentation

Here is a timeline of what we actually did:

March 3: Finished all drafting and the proposal, and submitted it to get feedback and confirmation that it was good to go (Karen, LiuLu, Rongzi)

March 10: Figured out whether tf can be done in Gazebo (Rongzi), created the GitHub repo (Karen), drew diagrams (Liulu), explored Gazebo worlds to get assets and understand their structure (Karen)

March 17: Ran multiple robots in Gazebo (Karen), created multi-robot Gazebo launch files (Karen), wrote code for patrolling a line (Liulu), created the box world (Rongzi), made the behavior diagram (Karen)

March 24: Continued writing code for multi-robot line patrolling (Liulu), explored fiducials to integrate (Karen), made a better Gazebo world (Rongzi)

March 31: Align the robots in a circle- or square-like formation to protect it

April 7: Detect whether something is coming at it

April 14: Make the robots move to protect it from the direction the person is approaching

April 21: Testing

April 28: Testing

May 3: Stop writing code and work on creating a poster

May 10: Continue preparing for the presentation

Here is another breakdown of the timeline that we actually followed:

To address blockers, we had to start asking ourselves questions and addressing issues like:

What are the major risky parts of your idea so you can test those out first?

What robot(s) will you want to use?

What props (blocks, walls, lights) will it need?

What extra capabilities or sensors will your robot need? How have you verified that this is possible?

And many other questions.

Here was the original plan of what we wanted to display:

Things did not go according to plan: we did not realize that going from step 2 to step 3 would be so much harder, because of all the edge cases and blockers we discovered (concurrency, network latency, camera specs). We ended up focusing only on the patrolling and blocking stages.

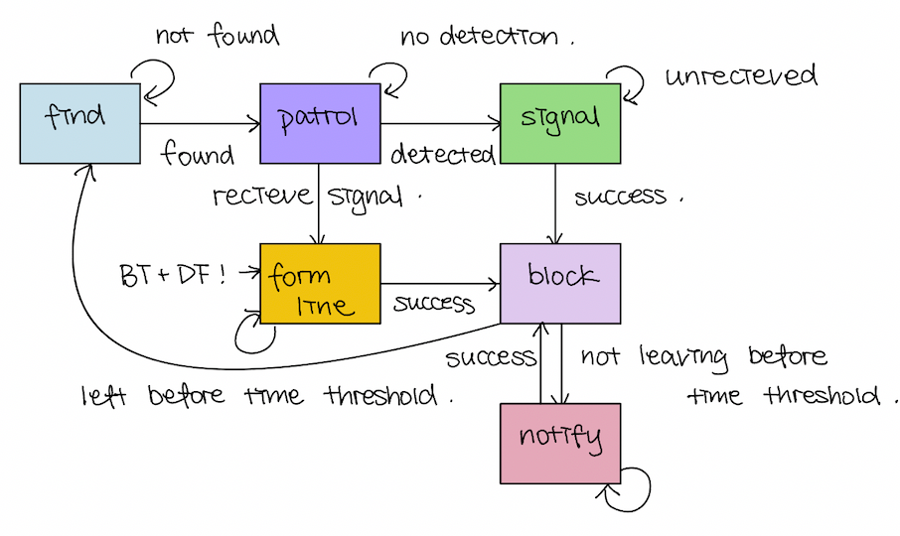

There are many states in our project, and there are even states within states that need to be broken down. Whenever we went off to code, we made sure to come back to this table and check off every item on the control logic. We were more interested in getting all the states working, so we did not use any state management packages. Another reason we did not use those packages was that each Python file represented one node, so we needed multiple files and could not run multiple robots from a single file.
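Without a state management package, state logic like ours lives in plain Python inside each robot's node. The sketch below shows that style of hand-rolled state machine with an `Enum` and a single transition function. The states `PATROL`, `BLOCK`, and `RETURN`, the function `next_state`, and the distance threshold are hypothetical, loosely based on the patrolling and blocking stages described above; this is not the project's actual code.

```python
from enum import Enum


class GuardState(Enum):
    PATROL = "patrol"  # move back and forth along the assigned line
    BLOCK = "block"    # move to cut off an approaching intruder
    RETURN = "return"  # go back to the patrol line after the threat leaves


# Hypothetical threshold: an intruder closer than this (meters) triggers blocking.
ALERT_DISTANCE = 1.5


def next_state(state, intruder_distance, on_patrol_line):
    """Pick the next state from the current state and the latest readings.

    intruder_distance is None when no intruder is detected.
    """
    intruder_close = (intruder_distance is not None
                      and intruder_distance < ALERT_DISTANCE)
    if intruder_close:
        # Blocking takes priority over every other state.
        return GuardState.BLOCK
    if state is GuardState.BLOCK:
        # The threat is gone, so head back to the line.
        return GuardState.RETURN
    if state is GuardState.RETURN and on_patrol_line:
        return GuardState.PATROL
    return state
```

Each robot's node would call `next_state` once per control loop iteration and dispatch on the result, which keeps all transitions in one place even when every robot runs as its own node.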

Here are the states that we were planning to have.